It's 10 years since the groundbreaking Melodyne audio-processing software was launched. To celebrate, its inventor Peter Neubäcker invited us into his research lab to talk about possible future developments.

Celemony was founded a decade ago, and the company has every reason to celebrate its 10th anniversary this year. Not only have they consistently led the field of monophonic pitch and time manipulation with the first three versions of their Melodyne software, but they've also rewritten the studio rulebook with their Direct Note Access (DNA) algorithm. This can manipulate individual notes within a mixed polyphonic audio file, a feat that even now remains out of reach of any of their commercial competitors.

The main technological brains behind this success are those of Peter Neubäcker, who I recently took the opportunity to interview in his home town of Munich as part of the company festivities. Surveying this softly spoken man's CV, it's fair to say that the average careers advisor wouldn't exactly have singled him out as a successful IT entrepreneur: a sometime hippie, luthier, and astrologer, by the early '80s Neubäcker had already been thrown out of school for playing truant, narrowly escaped six months in prison for refusing to do his compulsory military/community service, and built an alchemical laboratory in his basement!

Yet geometry and music appear to have threaded their way continually through his life, finally finding a focus in the study of harmonics, a subject examining numerical and musical relationships and championed by the Viennese Professor Rudolf Haase. It was Neubäcker's research interest in harmonics that led him to experiment with computers, first with the Atari, and then with CSound on the NeXT computer platform.

During the mid‑'90s, he met Celemony's fellow‑founder (and now Technical Director) Carsten Gehle through their common interest in NeXT programming, and when the idea of Melodyne hit, the two of them decided to put their heads together to bring a product to market. "I had no experience whatsoever in the field of professional software development, and had totally underestimated the amount of work involved in going from a functioning prototype to an actual product,” admits Neubäcker candidly, "[so] I would have failed hopelessly in this endeavour had it not been for [Carsten] and his gift for structured thinking and solidly based software design.”

From then on, most studio jockeys are familiar with Celemony's rise and rise. First there was the original stand‑alone Melodyne in 2001; then two subsequent versions that integrated with standard MIDI + Audio sequencers via the Melodyne Bridge plug‑in; then a bona fide, all‑in‑one Melodyne plug‑in; and finally the release, in late 2009, of the DNA‑powered Melodyne Editor plug‑in.

In Search Of DNA

Neubäcker has always been very forthcoming in explaining how his processing algorithms work, and has already said much about the original Melodyne algorithm: roughly speaking, it isolates periodic waveform elements to create a series of stand‑alone snapshots of the audio signal (a bit like synthesizer wavetables), each of which represents the 'local sound' at a particular point in time. What quickly becomes clear as our conversation gets under way, however, is that the new polyphonic Melodyne algorithm isn't just an extrapolation of that approach, but had to be developed from scratch. "It's impossible to have the original idea of the 'local sound' in polyphonic mode,” explains Neubäcker. "For the original Melodyne, I made the detection only in the time domain, not in the frequency domain, because I looked for periodicities. For polyphonic material, that's not possible any more, because there's no periodicity. It's not that simple. Yes, you can shift polyphonic material en masse with existing techniques, such as phase vocoding, but when it comes to separating out single notes from a polyphonic source, you have a mix of overtones and no means of finding out which overtone belongs to which note; in other words, which overtone is a fundamental and which is a harmonic. The greatest difficulty is to assign one part of an overtone to one note, and another part to another.”
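To make the point about periodicity concrete, here is a minimal sketch of a generic time‑domain periodicity detector based on normalized autocorrelation. It is a textbook technique chosen for illustration, not Celemony's algorithm, and the function name, sample rate and test frequencies are all assumptions. A single 200Hz note gives a near‑perfect match at its period, while a mix of two unrelated notes gives no comparably clear short period.

```python
import math

SAMPLE_RATE = 8000  # assumed value, chosen so that 200 Hz has a 40-sample period


def estimate_period(samples, min_lag, max_lag):
    """Return (lag, score) for the lag with the highest normalized autocorrelation."""
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        n = len(samples) - lag
        # Correlate the signal with a copy of itself delayed by `lag` samples.
        num = sum(samples[i] * samples[i + lag] for i in range(n))
        energy_a = sum(samples[i] ** 2 for i in range(n))
        energy_b = sum(samples[i + lag] ** 2 for i in range(n))
        denom = math.sqrt(energy_a * energy_b)
        score = num / denom if denom > 0 else 0.0
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag, best_score


def sine(freq_hz, length):
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(length)]


# Monophonic case: one 200 Hz note.
mono = sine(200.0, 800)
mono_lag, mono_score = estimate_period(mono, 20, 60)

# "Polyphonic" case: two notes whose frequencies are not simply related.
poly = [a + b for a, b in zip(sine(200.0, 800), sine(310.0, 800))]
poly_lag, poly_score = estimate_period(poly, 20, 60)

print(mono_lag, round(mono_score, 3))
print(poly_lag, round(poly_score, 3))
```

The monophonic signal repeats almost exactly after 40 samples, so its score is close to 1. The mixed signal only repeats over a much longer span, so within the same lag range its best score is far weaker, which is the difficulty Neubäcker describes.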

Even in the face of scepticism from many of his fellow software developers, Neubäcker decided to actively hunt for a true polyphonic algorithm, and although progress turned out to be quicker than he'd expected, it was still quite a long process. "But not of coding, just of thinking,” stresses Neubäcker. "I was thinking, if a Fourier Transform gives me the signal's spectral components, and if we, as listeners, can hear what is in there, then there must be a way to separate them, if not totally, then at least to that degree that we can get control over the important parts.”
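As a rough illustration of what a Fourier Transform gives you, the sketch below runs a plain discrete Fourier transform over a mix of two sine waves and reports the two strongest frequencies. The helper names and the test signal are assumptions made for the example, and real analysis software would use a fast FFT on short overlapping windows rather than this slow direct sum.

```python
import cmath
import math

SAMPLE_RATE = 4000  # assumed; with 400 samples each bin is exactly 10 Hz wide
NUM_SAMPLES = 400


def dft_magnitudes(samples):
    """Magnitude of each DFT bin from 0 Hz up to half the sample rate."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(samples[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))
        mags.append(abs(acc))
    return mags


def strongest_frequencies(samples, sample_rate, count):
    """Frequencies (Hz) of the `count` largest bins, in ascending order."""
    mags = dft_magnitudes(samples)
    top_bins = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:count]
    return sorted(k * sample_rate / len(samples) for k in top_bins)


# A louder 200 Hz component mixed with a quieter 310 Hz component.
mix = [
    math.sin(2 * math.pi * 200 * i / SAMPLE_RATE)
    + 0.5 * math.sin(2 * math.pi * 310 * i / SAMPLE_RATE)
    for i in range(NUM_SAMPLES)
]

peaks = strongest_frequencies(mix, SAMPLE_RATE, 2)
print(peaks)
```

The transform lists the components that are present, but nothing in that list says which component belongs to which note. Working that out is the part that took "thinking" rather than coding.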

An important factor in solving this problem was the development of a method of detecting the notes within the audio, because the relevance of the different signal overtones could then be rated in relation to those notes. "On a spectrogram I see what I see, and I can interpret it in a way, but to make the program find what I see, I have to find a way to tell it that 'this is an object', 'this is a note', so that then the most relevant parts can be followed. The most difficult part of the work is to find out what is relevant and what is noise, because there may be musical notes that are very relevant, but quieter than any noise. There are things that we hear that are on a higher level of importance, and there are things that are not. If there's a little harmonic somewhere that's hardly audible and you can't decide if it belongs to this note or to that note, it really doesn't matter, because it's not so important.”

The Problem Of Spill

The issue of 'relevance' continues to exercise Peter Neubäcker as he continues his research work. "What I'm interested in at the moment is processing multi‑miked ensemble recordings. When recording a band live, you have all the individual instruments with a lot of spill between them. If you wanted to retune one instrument, you'd have to retune the spill in every other signal. I worked on the last Peter Gabriel CD, for example, which had a horn section on it that they wanted to retune. It was a very typical situation: they had five horns and each one had a microphone of its own, but there was a lot of spill, of course. If you retuned any individual mic, you'd have had to manually retune that spill on the other instruments by the same amount.

"My idea is that if you analyse each different microphone, the system can work out which is the main voice in that one, and handle the other signals appropriately. It doesn't remove the spill — it only does it virtually for the user. They'll only see the saxophone voice they're processing, and won't be seeing the spill, because that will be handled automatically. You just grab that instrument and move it around, and the system knows which spill notes on the other microphones have to be adjusted. But it's at the laboratory stage at the moment — what we had to do with this Peter Gabriel recording was mix it down to a stereo file to retune it, which meant that they didn't have to retune every file separately. But that meant they couldn't then re-use the individual files for a 5.1 mix or whatever.”
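The bookkeeping side of this idea can be sketched in a few lines. The example assumes the hard part is already done, namely that the same note has been identified on every microphone, and every name in it (`NoteOnMic`, `main_mic`, `retune`, the level figures) is hypothetical rather than anything taken from Melodyne.

```python
from dataclasses import dataclass


@dataclass
class NoteOnMic:
    note_id: str          # which performed note this is (assumed already matched across mics)
    mic: str              # which microphone signal it was detected in
    level_db: float       # how loud the note is on that microphone
    shift_cents: float = 0.0


def main_mic(observations, note_id):
    """The microphone on which the note is loudest is treated as its main voice."""
    matches = [o for o in observations if o.note_id == note_id]
    return max(matches, key=lambda o: o.level_db).mic


def retune(observations, note_id, cents):
    """Apply one pitch shift to the note wherever it appears, spill included."""
    for o in observations:
        if o.note_id == note_id:
            o.shift_cents += cents


observations = [
    NoteOnMic("sax_phrase", "sax_mic", -6.0),
    NoteOnMic("sax_phrase", "trumpet_mic", -24.0),   # spill
    NoteOnMic("sax_phrase", "trombone_mic", -30.0),  # spill
    NoteOnMic("trumpet_phrase", "trumpet_mic", -5.0),
    NoteOnMic("trumpet_phrase", "sax_mic", -22.0),   # spill
]

sax_main = main_mic(observations, "sax_phrase")
retune(observations, "sax_phrase", 25.0)
sax_shifts = [o.shift_cents for o in observations if o.note_id == "sax_phrase"]
trumpet_shifts = [o.shift_cents for o in observations if o.note_id == "trumpet_phrase"]
print(sax_main, sax_shifts, trumpet_shifts)
```

The user would only ever see the saxophone note on its main microphone, while the same shift is applied to its spill elsewhere and the trumpet notes are left alone.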

In discussing his ideas for dealing with spill, Neubäcker played me a few examples he'd been using in his research, and already at its current stage of development it appeared to be distinguishing between spill and non‑spill signals very effectively. The most impressive example was where six young choristers singing in unison had each had their own mic, and the algorithm seemed able to distinguish between their very similar on‑mic and off‑mic sounds remarkably accurately.

Here are a couple of spectrograms from the analysis software in Peter Neubäcker's own laboratory. The left one shows a section of one of the microphone signals from a unison boys' choir recording in which each of six choristers had his own mic. Notice the complicated overlapping spill signals, which can make note detection very unreliable. By comparing the different microphone signals, however, Neubäcker's prototype 'relevance evaluation' technology can much more reliably separate the wanted signal from the spill for note-detection purposes, as you can see in the right spectrogram.

"Of course this couldn't be in Melodyne Editor, because it's just one track,” adds Neubäcker, "so it would have to be in a later version. An important aim for Melodyne Studio in the future is that there are tracks that 'know' each other, and know their context. In that future version there would be 'group tracks', where you would have to specify that certain recordings belong together, so that they are analysed together.”

Developments In Note Detection & Noise Handling

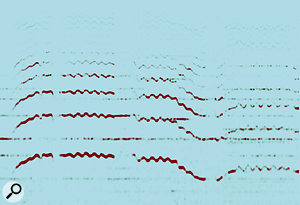

Additional research is also going into improving the software's polyphonic note‑detection process in response to more expressive internal lines. "If you have a better detection of the pitch curves,” says Neubäcker, "the processing of the sound will be better as well. A pitch course with a lot of vibrato or portamento is followed perfectly well by Melodyne's monophonic algorithm, but the DNA technology doesn't yet handle this kind of line very well within a polyphonic context: the vibrato comes out as lots of different notes. I'm on the way to following the paths of the single instruments now, though, so if there are parts to be found, then that part of the detection will become a lot better. So in future there will be less to do in the Correct Detection mode, because I'm always working on how things can be assigned more plausibly in terms of which are notes and which are not notes.”

Peter Neubäcker is working on improving Melodyne Editor's DNA note detection, which has trouble dealing with wide vibrato in polyphonic audio. In this example, strings of spurious notes are detected in polyphonic audio consisting of two very simple operatic female vocal phrases. While it's already fairly simple to clean up unwanted notes manually, the aim is for Melodyne to get much closer to the desired result automatically.

It appears that detection may also improve in situations where band‑limited signals have little, if any, fundamental frequencies for their lowest notes. One example Neubäcker played for me was an ancient recording of Caruso warbling over a full orchestra. Even though the recording included hardly any note fundamental at all for Caruso's voice, Neubäcker's improved 'relevance evaluation' routine was nonetheless able to correctly interpret where the note fundamental should have been. "You hardly have any fundamental at all; you only hear the overtones,” he commented. "If I switch on the relevance evaluation, however, you suddenly see it down there. This isn't really in the signal, it's just reconstructed from the overtones. When the path‑following takes place, it will find these things down there as well.”

Here are another couple of spectrograms from Peter Neubäcker's laboratory, which demonstrate the potential of his new 'relevance evaluation' algorithm. The left-hand spectrogram shows a raw analysis of an old Caruso recording, in which you can clearly see the pitch contour of Caruso's voice, but with a vanishingly low level of each note's fundamental frequency. The spectrogram on the right shows how the relevance evaluation can nonetheless reliably identify where the fundamentals lie.
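One simple way to see how a fundamental can be "reconstructed from the overtones" is to ask which candidate pitch has a harmonic series that accounts for the most detected partials. The sketch below does only that. It is a generic illustration under assumed names, frequencies and tolerance, not the 'relevance evaluation' routine itself, whose workings the interview doesn't describe.

```python
def infer_fundamental(partials_hz, candidates_hz, tolerance=0.03):
    """Return (f0, hits) for the candidate whose harmonics explain the most partials.

    A partial counts as explained if it lies within `tolerance * f0` of an
    integer multiple of f0. Ties go to the higher candidate, which avoids
    choosing a sub-octave that trivially explains the same partials.
    """
    best_f0, best_hits = None, -1
    for f0 in sorted(candidates_hz):
        hits = 0
        for partial in partials_hz:
            harmonic = round(partial / f0)
            if harmonic >= 1 and abs(partial - harmonic * f0) <= tolerance * f0:
                hits += 1
        if hits >= best_hits:
            best_f0, best_hits = f0, hits
    return best_f0, best_hits


# Overtones 2 to 5 of a 220 Hz note; the 220 Hz fundamental itself is absent,
# as it might be on a band-limited old recording.
partials = [440.0, 660.0, 880.0, 1100.0]
candidates = [110.0, 146.7, 220.0, 330.0, 440.0]

f0, hits = infer_fundamental(partials, candidates)
print(f0, hits)
```

Here 220Hz wins even though nothing in the input sits at 220Hz, because it is the highest pitch whose harmonics line up with all four overtones.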

When I reviewed Melodyne Editor back in SOS December 2009, one of my main criticisms was that Melodyne still struggled to cope with the less predictable noisy elements of musical signals: instrument transients, for example, often seemed to respond unpredictably when you manipulated note timings. Neubäcker suggests that additional control may be forthcoming in this department, via independent manipulation of what he calls the 'remainder signal', in other words the transients and other noises that remain when all the pitched information is removed: "After we introduced DNA processing, more than two years ago, we were not quite sure what the interface would be, and in fact we reduced some of the functionality because we saw that it was a mess to deal with everything, and we needed to gain some more experience of how people wanted to work with it. Therefore we assigned the remainder signal to the notes that were detected, so that the user didn't have to deal with it. However, if we later introduce another processing level for this, things like a door slam or a click in the audio would get into the remainder track, so you'd have more objects to touch, so that you could say 'I don't want this object' or 'I want it louder', or something.”

Fun as it is to gaze into the Celemony crystal ball, however, Peter Neubäcker is keen to point out that there can be no guarantee of when (or indeed if!) the fruits of his latest research will make their way into new versions of Melodyne. "I'm learning as well,” laughs Neubäcker. "We had a very hard time after we announced DNA, and it took us nearly two years to get to the point of releasing Melodyne Editor. What is going to work and what is not going to work is still in the process. I'm in the happy situation at the moment where I'm more in the research laboratory and other people in the company are working on the concrete products, but we have so many ideas in our research laboratory in different stages of maturity that it's impossible to say with any confidence whether a certain feature will be available in a given version of Melodyne. I like to be very open about what I do, but I just want to prevent people getting expectations about what is to come in case it doesn't come, or doesn't come so soon!”

Perform First, Compose Later

Despite working for more than a decade on Melodyne's core technology, Peter Neubäcker continues to show an unswerving enthusiasm for what he's doing, driven by his desire to remove technological obstacles from the creative process. "The potential of this technology is incredible. I've always thought that if you can get access to every musical element, then it melts together the processes of music production and composition so that it's not important that you have the composition ready in the first place and then record it. You can record something first, and then see what you can compose with it.

"The problem with recording is that music is frozen at a certain time, and there's a very decisive separation of roles between the composer and the performer. We can change this if we give the instrumentalist, or the vocalist, or the musician the freedom to improvise or to be a composer after the performance — to re‑perform the music according to their vision. Maybe the composer has a vision of his music (and I think it's the vision of this music that really matters) that no performer is able to perform? Or maybe some other composer afterwards says 'Oh, it would have been nicer this way'? The musician will have to be less of a technician, and more of a musician. As long as the technology serves the quality of the music, I think it can only make things better.”

Melodyne & Resynthesis

Peter Neubäcker: "In the original Melodyne, the formants were not analysed in terms of individual harmonics, but more in terms of the overall spectral shape. For example, if you retuned the formant in the original Melodyne, you shifted the whole formant spectrum. What we can do now with the new analysis is reshape the spectrum as well. I've done a lot of experimental stuff with it. For example, usually if you have a single monophonic guitar note, Melodyne Editor will select Melodic mode and group all the harmonics together. However, you can get into the field of creative abuse if you manually switch the detection mode to Polyphonic and then label some overtones as notes in themselves — if you retune them, you can get something more like a gong sound than a guitar. You can take a real flute recording, and make it sound more like a synthesizer, but because the original phrasing of the flute is always there, it's more alive than a synthesizer would be. You can turn any sound into a synthesized sound.”

Celemony Melodyne Reviews In SOS

Melodyne: November 2001

/sos/nov01/articles/melodyne.asp

Melodyne 2, featuring Melodyne Bridge: January 2004

/sos/jan04/articles/melodyne2.htm

Melodyne 3: April 2006

/sos/apr06/articles/melodyne.htm

Melodyne Plug-in: March 2007

/sos/mar07/articles/at5vsmelodyne.htm

Melodyne Editor Plug-in: December 2009