Some pieces of bad advice just won’t die, no matter how unhelpful they are. Here’s why you shouldn’t believe everything you read on the Internet...

Myth: Double glazing is good for sound isolation, so triple glazing must be even better.

Adding secondary panels to a single-glazed window gives you the benefit both of an additional layer of glass, and of an air ‘spring’. It’s usually better to make this air gap as wide as possible, rather than using the same space to add further panels.

Fact: Acoustics is rarely intuitive, and this is a classic example of a belief that looks perfectly logical but is usually wrong. The key point to keep in mind with regard to sound isolation is that the entire structure (in this case, the window) must be considered as a system. The amount of isolation afforded by a simple piece of material, such as a sheet of glass, is related to its mass and hence to the thickness of the glass employed. Add a second sheet of the same glass and you double the mass, but this, in and of itself, provides only a relatively small improvement in the amount of isolation. The real reason double glazing is so much more effective than single glazing is that the air trapped between the two panes behaves like a spring, with a very effective damping effect that greatly reduces the extent to which vibrations in one pane can be transmitted to the other.
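As a rough illustration of why extra glass on its own helps only modestly, the textbook field-incidence "mass law" approximates the transmission loss of a single limp panel as TL ≈ 20·log10(m·f) - 47 dB, where m is the surface density in kg/m² and f is the frequency in Hz. The sketch below assumes that approximation and a glass density of about 2500 kg/m³; real panes also have a coincidence dip that the mass law ignores.

```python
import math

GLASS_DENSITY = 2500.0  # kg/m^3, an assumed typical figure for float glass


def surface_density(thickness_mm: float) -> float:
    """Mass per square metre (kg/m^2) of a glass pane of the given thickness."""
    return GLASS_DENSITY * thickness_mm / 1000.0


def mass_law_tl(surface_density_kg_m2: float, freq_hz: float) -> float:
    """Approximate field-incidence mass-law transmission loss, in dB."""
    return 20.0 * math.log10(surface_density_kg_m2 * freq_hz) - 47.0


for f in (125, 500, 2000):
    single = mass_law_tl(surface_density(4), f)   # one 4mm pane
    doubled = mass_law_tl(surface_density(8), f)  # twice the glass, no air gap
    print(f"{f:>5} Hz: 4mm {single:5.1f} dB, 8mm {doubled:5.1f} dB, "
          f"gain {doubled - single:.1f} dB")
```

In this model, doubling the mass adds only about 6dB at every frequency; the much larger benefit of real double glazing comes from the decoupling provided by the air gap.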

The degree of isolation afforded by a double-glazed window, or indeed any ‘two-leaf’ structure such as a cavity wall, depends partly on the mass of the panels, and partly on the width of the air gap. In particular, as the air gap is narrowed, the effectiveness of the isolation at low frequencies is lost. What this means is that in a window of any given depth, you’ll get better low-frequency isolation by having two panes of glass separated by a wide air gap than by inserting an additional pane in the middle and creating two narrow air gaps. It is true that triple glazing can provide more isolation at high frequencies, but these are almost never the problem! Sam Inglis
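The link between gap width and low-frequency isolation can be made concrete with the standard "mass-air-mass" resonance of a two-leaf partition: around and below this frequency the two panes move together on the air spring and the cavity adds little isolation. This is a sketch under idealised assumptions (limp panes, no absorption in the cavity, typical values for air), and it models a single two-pane system rather than a full triple-glazed unit.

```python
import math

RHO_AIR = 1.21  # kg/m^3, density of air at roughly 20 degrees C
C_AIR = 343.0   # m/s, speed of sound at roughly 20 degrees C


def mass_air_mass_resonance(m1: float, m2: float, gap_m: float) -> float:
    """Resonance in Hz of two panes (surface densities m1, m2 in kg/m^2)
    coupled by an air 'spring' of the given depth in metres."""
    stiffness = RHO_AIR * C_AIR ** 2 / gap_m  # air-spring stiffness per unit area
    return math.sqrt(stiffness * (m1 + m2) / (m1 * m2)) / (2.0 * math.pi)


pane = 10.0  # kg/m^2, roughly a 4mm pane of glass
for gap_mm in (100, 50, 16):
    f0 = mass_air_mass_resonance(pane, pane, gap_mm / 1000.0)
    print(f"{gap_mm:>3} mm gap: resonance around {f0:.0f} Hz")
```

Halving the gap raises the resonance by a factor of √2, which is why a narrower cavity gives up low-frequency isolation.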

Myth: It’s not safe to use ribbon mics on loud sources, or with phantom power.

Crowley & Tripp’s El Diablo, now sold as the Shure KSM313, was specially designed to counter the common perception that ribbon mics are fragile. Other models are susceptible to air blasts and shocks, but not to loud noises or phantom power!

Fact: It is perfectly possible (and safe) to use a ribbon mic under both circumstances, but there are, as always, some caveats.

The diaphragm in a ribbon microphone is inherently more delicate than that of a moving coil or capacitor microphone, but this fragility relates primarily to large G-force shocks and strong air currents, which can stretch or even tear the ribbon. In contrast, even loud sounds produce relatively small atmospheric pressure variations, and there are no internal electronics to overload, so reasonably high SPLs really aren’t a problem. Ribbon mics can be and are used in front of brass instruments like trumpets, trombones and saxophones, employed as drum overheads, placed in front of guitar amps, and I’ve even seen them used in front of kick drums (although I wouldn’t personally take it quite that far!).
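To put "relatively small" into numbers: sound pressure level is referenced to 20 micropascals, so even very loud sounds are tiny fluctuations compared with standard atmospheric pressure of about 101kPa. A quick sketch of the arithmetic, with arbitrary example SPL values:

```python
P_REF = 20e-6           # Pa, the 0dB SPL reference pressure
ATMOSPHERE = 101_325.0  # Pa, standard atmospheric pressure


def spl_to_pascals(spl_db: float) -> float:
    """RMS sound pressure in pascals for a given sound pressure level."""
    return P_REF * 10 ** (spl_db / 20.0)


for spl in (94, 120, 140):
    p = spl_to_pascals(spl)
    print(f"{spl} dB SPL: {p:7.2f} Pa RMS, "
          f"{100 * p / ATMOSPHERE:.3f}% of atmospheric pressure")
```

Even 140dB SPL amounts to a fluctuation of only around 0.2% of atmospheric pressure, which is why air blasts and physical shocks, rather than sheer loudness, are the real threat to a ribbon.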

If you want to use a ribbon mic on a loud source, there are some easy precautions that can be taken to protect it. First, angling the mic at 45 degrees to the sound source can help, because moving air then ‘brushes across’ the diaphragm instead of hitting it straight-on, considerably reducing the risk of serious damage. (Placing the source this far off-axis usually has only a minimal effect on the frequency response of a fig-8 mic.) Second, a large pop-screen can be placed an inch or two in front of the microphone to protect it from any strong air blasts sent its way!
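Assuming an ideal figure-of-eight pattern, whose sensitivity follows the cosine of the off-axis angle, the cost of a 45-degree tilt is mainly a drop in level of about 3dB rather than a change in tone. Real ribbons depart from the ideal cosine at high frequencies, so treat this sketch as a first approximation only.

```python
import math


def figure_eight_gain_db(angle_deg: float) -> float:
    """Ideal figure-of-eight sensitivity relative to on-axis, in dB."""
    gain = abs(math.cos(math.radians(angle_deg)))
    return 20.0 * math.log10(gain) if gain > 0 else float("-inf")


for angle in (0, 30, 45, 60):
    print(f"{angle:>2} degrees off-axis: {figure_eight_gain_db(angle):.1f} dB")
```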

The phantom power myth pertains to two very specific and (hopefully) very rare situations. If the microphone is connected with a faulty cable where one of the signal wires is shorted to the screen, there will be 48V phantom on one end of the output transformer and 0V at the other. A current will therefore flow through the transformer, which may cause magnetic saturation (making the mic sound very thin and bass-less), and may even permanently magnetise it. The same current can result in a motive force being applied to the ribbon itself, with the potential to damage or destroy it. The second possibility concerns vintage ribbon mics, some of which employed centre-tapped output transformers with a centre ground connection. With phantom power applied, a current will flow through each half of the output transformer to the centre ground, potentially causing damage.
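To put a rough figure on the fault current in the shorted-cable case, assume standard P48 phantom power, fed through a 6.81kΩ resistor in each leg, and a transformer winding resistance small enough to ignore. With one leg shorted to the screen, the other leg's feed resistor drives DC straight through the winding to ground; with a healthy cable both ends of the winding sit at the same 48V, so no DC flows through it at all.

```python
PHANTOM_VOLTS = 48.0
FEED_RESISTOR_OHMS = 6810.0  # per leg, as used for P48 phantom power


def fault_current_ma(winding_ohms: float = 0.0) -> float:
    """DC (mA) through the output winding when one signal leg is shorted to
    the screen: the healthy leg's feed resistor drives current to ground."""
    return 1000.0 * PHANTOM_VOLTS / (FEED_RESISTOR_OHMS + winding_ohms)


print(f"fault current: about {fault_current_ma():.1f} mA")
```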

To guard against both of these problems it is only necessary to check and maintain mic cables regularly, and make sure you know how an unfamiliar or vintage microphone is wired before using it. I spent my entire BBC career routinely ‘hot-plugging’ ribbon mics in studios with phantom power supplied permanently from the wall boxes, and no ribbon mics were ever harmed in the making of those BBC programmes! Hugh Robjohns

Myth: Capacitor mics ‘pick up more of the room’ than dynamic mics.

If mics have the same polar pattern and are placed at the same distance from the source, they’ll pick up the same balance of direct and reflected sound, no matter whether they are dynamic or capacitor designs.

Fact: Unless it’s in an anechoic chamber, no microphone will pick up only the direct sound coming from a voice or instrument. Some of that sound will always bounce around the room and be captured in reflected form as ambience or reverberation. Assuming the sound source and the room remain unchanged, the balance of reverberation to direct sound that is captured depends on two factors. The first is distance: the closer the mic is to the sound source, the more the direct sound will tend to dominate over ambience. The second is the polar pattern of the microphone, and the position of the sound source in relation to it: pointing a cardioid mic directly at a singer ensures that it is on-axis to direct sound while offering maximum rejection to reflected sound arriving at the back of the mic. When a microphone is being used specifically to capture room ambience, you might wish to do the exact opposite, and ensure that direct sound arrives at the deepest null point of that mic’s polar pattern.
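Both factors can be put into rough numbers with an idealised model, assuming a perfectly diffuse reverberant field whose level is the same everywhere in the room. Direct sound falls by about 6dB per doubling of distance, while a directional pattern reduces reverberant pickup according to its "random energy efficiency" (one third for an ideal cardioid or figure-of-eight). The function and dictionary names are illustrative, and real rooms are never perfectly diffuse; note, though, that the microphone's operating principle appears nowhere in the calculation.

```python
import math

# Fraction of diffuse (reverberant) energy picked up relative to an omni with
# the same on-axis sensitivity; standard values for ideal patterns.
RANDOM_ENERGY_EFFICIENCY = {"omni": 1.0, "cardioid": 1.0 / 3.0, "figure-8": 1.0 / 3.0}


def direct_to_reverb_db(distance_m: float, pattern: str,
                        ref_distance_m: float = 1.0) -> float:
    """Direct-to-reverberant ratio in dB, relative to an omni at ref_distance_m,
    for an on-axis source in an idealised diffuse field."""
    direct = -20.0 * math.log10(distance_m / ref_distance_m)      # inverse-square law
    reverb = 10.0 * math.log10(RANDOM_ENERGY_EFFICIENCY[pattern])  # diffuse pickup
    return direct - reverb


for pattern in ("omni", "cardioid"):
    for d in (0.05, 0.3, 1.0):
        print(f"{pattern:>8} at {d:.2f} m: {direct_to_reverb_db(d, pattern):+.1f} dB")
```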

The actual operating principle of the microphone is irrelevant. Assuming that a dynamic and a capacitor mic have the same polar pattern, and that their diaphragms are the same distance from the sound source, they will pick up exactly the same balance of direct to reflected sound. However, this myth does contain a grain of truth. Many of the most commonly used dynamic microphones are designed specifically to be employed very close to their sources. Until recently, by contrast, there have been very few ‘stage capacitor’ microphones intended to be placed right in front of singers’ mouths. So, when used as intended, a Neumann U87 will indeed ‘pick up more room’ than a Shure SM58 — but only because it’s further away from the sound source! Sam Inglis

Myth: Stereo recording can be truly ‘mono-compatible’.

When a stereo recording is collapsed to mono, some of the information is necessarily ‘thrown away’, meaning that it’s impossible to preserve the balance exactly as it was represented in the stereo signal. Ambience is one frequent casualty when this occurs.

Fact: This depends on what we understand by the term ‘truly mono-compatible’, but if we mean the ‘mono’ balance sounding identical to the ‘stereo’ balance but narrower, then it is most definitely a myth!

While we usually think of a stereo signal in terms of the left and right halves (LR) of the stereo image, an alternative view is to consider it in terms of the Middle and Sides (MS). The ‘Middle’ signal is the sum of left and right (the mono component), while the Sides signal is the difference between the left and right channels, which carries anything lurking out towards the edges of the stereo image. In reducing stereo to mono, we hear only the Middle component, and the Sides element is discarded.
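A minimal sketch of the Middle/Sides relationship, assuming the common convention that halves the sum and difference so that L = M + S and R = M - S (some tools omit the halving or scale by 1/√2 instead). A hard-panned source splits equally between M and S, while anti-phase content lives entirely in S and so vanishes in mono.

```python
def lr_to_ms(left: float, right: float) -> tuple[float, float]:
    """Encode a left/right sample pair as Middle and Sides (halved convention)."""
    return (left + right) / 2.0, (left - right) / 2.0


def ms_to_lr(mid: float, side: float) -> tuple[float, float]:
    """Decode Middle/Sides back to left/right; the exact inverse of lr_to_ms."""
    return mid + side, mid - side


examples = {
    "centre-panned": (1.0, 1.0),
    "hard left": (1.0, 0.0),
    "anti-phase": (1.0, -1.0),  # e.g. some reverb or stereo-widening content
}
for name, (l, r) in examples.items():
    m, s = lr_to_ms(l, r)
    print(f"{name:>13}: M={m:+.2f} S={s:+.2f} (mono keeps only M)")
```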

Anything panned heavily to one side or the other is carried partially in the Middle signal and partially in the Sides signal, and thus the mix balance will inherently change slightly between the original stereo mix and the derived mono version when those Sides elements are discarded. Anything that exists predominantly in the Sides channel will be lost to the mono listener — and this often includes a large proportion of any reverberation, so the balance of ambience to direct sound is always the most obvious casualty. A stereo mix inherently ‘dries up’ when auditioned in mono.

An alternative use of the term ‘mono-compatibility’ relates to inter-channel timing and phase problems. One means of positioning sounds within the stereo image is to use timing offsets between the two channels, instead of level differences. This occurs naturally when any form of spaced microphone array is used, but also occurs in some forms of stereo signal processing (such as chorus), and commonly with artificial reverberation. When signals with inter-channel timing offsets are combined to mono, the resulting comb filtering often creates very audible coloration, and such situations are then said to suffer from ‘mono-compatibility problems’. By using coincident microphone arrays, conventional amplitude-panning, and signal processing techniques that only introduce level differences between the two stereo channels, the risk of comb-filtering coloration in the derived mono signal is eliminated, and hence such techniques are said to be ‘mono-compatible’. Hugh Robjohns
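The coloration follows directly from the arithmetic of summing a signal with a delayed copy of itself. This sketch assumes two equal-level copies separated by a pure time offset τ: the sum cancels at odd multiples of 1/(2τ) and reinforces by up to 6dB in between. Coincident arrays and amplitude panning keep τ at zero, so there is nothing to cancel.

```python
import math


def comb_notches_hz(delay_s: float, max_hz: float = 20000.0) -> list[float]:
    """Frequencies cancelled when a signal is summed with a copy delayed by delay_s."""
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2.0 * delay_s)
        if f > max_hz:
            break
        notches.append(f)
        k += 1
    return notches


def summed_gain_db(freq_hz: float, delay_s: float) -> float:
    """Level of the equal-level sum relative to one channel alone."""
    mag = abs(2.0 * math.cos(math.pi * freq_hz * delay_s))  # |1 + e^(-j2πfτ)|
    return 20.0 * math.log10(mag) if mag > 1e-12 else float("-inf")


# A 1 ms offset corresponds to roughly 34 cm of extra path length at 343 m/s.
delay = 0.001
print([round(f) for f in comb_notches_hz(delay)[:5]])  # first few cancelled frequencies
print(f"{summed_gain_db(1000.0, delay):+.1f} dB at 1 kHz")  # in phase: about +6 dB
```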

Myth: Professional audio equipment only ever uses balanced connections.

Balanced cables and connectors help to reduce the risk of noise when you’re working in an uncontrolled and unpredictable environment. But a pro studio is a controlled and predictable environment — so balancing should not be necessary.

Fact: Equipment manufacturers have to design equipment to work reliably in unknown applications where it may be exposed to strong interference, and may be poorly installed in terms of grounding and so on. In such circumstances, balanced interfaces are preferred because they greatly reduce the risk of interference and ground loops. However, virtually all electronic audio equipment employs unbalanced signals internally, and in a well-engineered installation there shouldn’t be any issues with local interference or ground loops. In these ‘controlled conditions’, therefore, unbalanced interconnections are perfectly viable and, in some cases, even preferred, partly because fewer active devices are required in the signal path, which can result in a lower noise floor. The American mastering engineer Bob Katz describes in his book Mastering Audio how his own mastering studio employs unbalanced connections in some parts of the signal chain, with audible benefits. Hugh Robjohns
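A minimal sketch of why balancing helps, assuming the idealised case where interference is induced identically in both conductors and the receiver's common-mode rejection is perfect. Real receivers achieve finite rejection, so the cancellation is large but never total, and impedance-balanced outputs rely on the same principle without driving the cold leg. The function names and noise model are purely illustrative.

```python
import random


def drive_balanced(signal):
    """Symmetric drive: the hot leg carries the signal, the cold leg its inverse."""
    return list(signal), [-s for s in signal]


def induce_common_mode(hot, cold, interference):
    """Idealised interference: exactly the same voltage appears on both legs."""
    return ([h + n for h, n in zip(hot, interference)],
            [c + n for c, n in zip(cold, interference)])


def differential_receiver(hot, cold):
    """Take the difference between the legs and halve it to restore unity gain."""
    return [(h - c) / 2.0 for h, c in zip(hot, cold)]


rng = random.Random(1)
signal = [0.5, -0.25, 0.75, 0.0]
interference = [rng.uniform(-0.1, 0.1) for _ in signal]  # e.g. induced hum

hot, cold = induce_common_mode(*drive_balanced(signal), interference)
received = differential_receiver(hot, cold)
unbalanced = [s + n for s, n in zip(signal, interference)]  # single signal conductor

print("balanced:  ", [round(x, 6) for x in received])    # interference cancels
print("unbalanced:", [round(x, 6) for x in unbalanced])  # interference remains
```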

Myth: A close mic should be placed three times closer to its target instrument than to any other instrument.

Relying blindly on any ‘rule’ is likely to lead to disaster. Measuring the distance between your mics and their sources can be useful, but not as useful as using your ears.

Fact: The so-called ‘3:1 Rule’ is an oft-quoted principle of ensemble miking. The assertion is that following this rule keeps spill from other instruments roughly 9dB lower in level, so that it doesn’t cause too much mischief at the mix. What a lot of people don’t realise about the 3:1 Rule, though, is that it’s based on a number of assumptions:

- That the instruments in question project all their frequencies equally in all directions. No instrument does this.

- That all the instruments in question are similarly loud. This is rarely the case — indeed, the whole point of using close mics in many cases is to bolster the level of instruments that are substantially quieter than those around them.

- That the mics pick up all frequencies equally in all directions. Even the best omni microphones don’t do this, and cheap directional types certainly don’t.

- That the mic signals are balanced at identical levels. This is only likely when the two instruments already balance correctly in the recording room — but if you’re using spot mics at all then it’s often because there are balance problems.

- That you’re recording in mid-air, without a floor, ceiling, and walls reflecting sound back into the mics. Admittedly, this one might apply to you if your studio is an anechoic chamber...
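The "roughly 9dB" figure is simply the inverse-square law applied to a 3:1 distance ratio, and it holds only under the idealised assumptions listed above. This sketch makes those assumptions explicit, and shows how quickly the figure collapses once the neighbouring instrument is louder (the 10dB difference is an arbitrary example).

```python
import math


def spill_below_target_db(distance_ratio: float, level_difference_db: float = 0.0) -> float:
    """How far spill sits below the target in a close mic, by the inverse-square law.

    Assumes point sources radiating equally in all directions, an omni mic and no
    room reflections; level_difference_db is how much louder the other source is.
    """
    return 20.0 * math.log10(distance_ratio) - level_difference_db


print(f"3:1, equal sources:         {spill_below_target_db(3):.1f} dB below")
print(f"3:1, neighbour 10dB louder: {spill_below_target_db(3, 10):.1f} dB below")
```

A negative result means the spill is actually louder than the target instrument in its own close mic.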

But it’s not just these issues that call the 3:1 Rule into question for me. It’s also that I don’t think there can be a ‘correct’ spill level for any spot mic, because the amount of spill is just one variable amongst many that influence your mic-positioning decisions when recording an ensemble. As such, I just don’t find the 3:1 Rule of much practical use in real recording situations. It perhaps offers beginners the flimsiest of guidelines for initial mic setup, but I wouldn’t suggest giving it much credence once you’re on a session, because only your ears can tell you whether you’ve got too much spill. Mike Senior

Myth: It’s essential to use a pop filter when recording vocals.

Fact: As an editor, you occasionally find yourself having to bite your tongue when authors say things you don’t agree with, and something that always gets my molars working is the recommendation to put up a pop shield as standard practice for recording vocals. This is a piece of advice that has appeared regularly in the pages of SOS, and practically everywhere vocal recording is discussed. And, in my view, it’s plain wrong.

Why would you want to put anything between your mic and the thing it’s recording? Pop shields have a place, but they often aren’t needed. Photo: Richard Ecclestone

The case against pop filters rests on two points. The first of these is that they have a negative effect on the sound. Now, I confess that I haven’t tried the latest and greatest advances in pop-shield technology, which cost several hundred pounds and are hand-knitted from pure science in a Swedish laboratory. However, I have repeatedly noticed that standard nylon pop filters exaggerate sibilance problems, and can introduce comb-filtering and other unwanted artifacts. No-one who cares about sound quality would be so blasé as to record an Amati violin through a double layer of their grandma’s tights, so why do people do this automatically with the most important element of their tracks?

The second point is that, most of the time, a pop filter isn’t even the best solution to the problem it’s intended to solve. Problems with plosives usually suggest a combination of poor mic technique on the part of the singer, and inappropriate mic placement on the part of the engineer. It’s true that there are some circumstances where jamming the mic right into the singer’s teeth is the only workable option, because of the need to minimise room pickup or spill from some other source. It’s also true that by the time a singer is laying down his or her vocal overdubs, it’s probably too late to send them back to mic school. And none of us wants to expose our precious microphone diaphragms to a volley of spittle. But in the majority of cases, a bit more experimentation with mic placement will solve the problem and yield a better vocal sound into the bargain. Take the singer out of the booth, put him or her in the middle of the live room floor, back the mic off and try placing it at eye level looking down: no pops, a natural sound and no more flailing around with EQ to try to remove all that proximity-effect bass boost and small-room boxiness.

There is a place for pop filters, when all else fails, but like everything that compromises the sound, pop shields should be a last resort rather than an automatic choice. Sam Inglis

Myth: External clocking will improve the performance of your digital gear.

Fact: In purely technical terms, this is a physical impossibility. A converter will generally perform best when using its internal crystal clock, because slaving to an external clock involves additional clocking circuitry, which rarely achieves a lock as stable as the internal crystal. Moreover, the external clock signal may well be degraded by the connecting cable, even if the remote clock source is extremely stable and accurate. The best that can be hoped for is that the performance remains the same whether clocked internally or externally, and many converters do manage this perfectly well.
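Why clock stability matters can be illustrated with a widely quoted approximation: for a full-scale sine wave of frequency f sampled by a clock with random RMS timing jitter σ, jitter alone limits the signal-to-noise ratio to about -20·log10(2π·f·σ) dB. This sketch assumes purely random jitter (real clock jitter often has correlated components that produce sidebands rather than broadband noise), and the jitter values are arbitrary illustrations, not measurements of any converter, cable or clock.

```python
import math


def jitter_limited_snr_db(signal_hz: float, rms_jitter_s: float) -> float:
    """Best-case SNR in dB for a full-scale sine sampled with random clock jitter."""
    return -20.0 * math.log10(2.0 * math.pi * signal_hz * rms_jitter_s)


for jitter in (10e-12, 100e-12, 1e-9):  # 10 ps, 100 ps, 1 ns RMS (illustrative)
    print(f"{jitter * 1e12:>6.0f} ps: {jitter_limited_snr_db(10_000, jitter):.0f} dB at 10 kHz")
```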

However, as revealed clearly in the ‘Does Your Studio Need A Digital Master Clock?’ article (SOS June 2010), the technical performance of most converters is degraded to some extent when slaved to an external clock. This may become audible as a subtle change of audio character, and some might prefer the result even though the technical performance is measurably degraded — especially if they’ve just paid a small fortune for an unnecessary master clock unit! Hugh Robjohns

Myth: You should always peak signals as close to digital peak level as possible.

Fact: This myth probably stems from the fact that commercial music formats such as CDs have no visible headroom margin: signals appear to peak regularly (even continuously!) at 0dBFS. This is possible because the actual peak level of the music is known, and part of the CD mastering process involves removing the no-longer-required headroom margin. However, when tracking and mixing, there are numerous benefits to using a sensible headroom margin, and doing so closely replicates the working practices and sonic advantages of analogue systems.

When recording live performances the actual peak level is likely to be unknown, and brief transients can be much higher than most metering systems can show (including the so-called digital sample peak meters found in DAWs). It is therefore sensible to allow a safety headroom margin to cope with these peaks to avoid the risk of overload. Similarly, when mixing multiple channels together the combined signal level will be much higher than the individual tracks, so again some headroom margin is required.
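One reason sample-peak meters under-read is easy to demonstrate. This sketch assumes an ideal band-limited signal: a full-scale sine at exactly a quarter of the sample rate, offset by 45 degrees, is only ever sampled at ±0.707 of its true peak, so a meter that reads sample values shows about 3dB less than the waveform the converter will reconstruct.

```python
import math


def sample_peak(samples: list[float]) -> float:
    """What a simple sample-peak meter reports: the largest absolute sample value."""
    return max(abs(s) for s in samples)


# Full-scale sine at a quarter of the sample rate, phase-shifted by 45 degrees:
# every sample lands at +/-0.707, never on the crest of the waveform.
samples = [math.sin(math.pi / 2 * n + math.pi / 4) for n in range(16)]
true_peak = 1.0  # the reconstructed waveform still reaches full scale between samples
shortfall_db = 20 * math.log10(true_peak / sample_peak(samples))
print(f"sample-peak meter under-reads by {shortfall_db:.1f} dB")
```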

Analogue equipment is configured to hide the headroom margin from the user by employing the concept of an ‘operating level’ (usually 0VU or +4dBu). Analogue meters indicate signal levels in a relatively narrow region around this reference point, without revealing the (typical) 20dB headroom margin before the actual clipping level of around +24dBu. In contrast, digital metering has always revealed the entire signal level range, leading to considerable confusion over where the equivalent operating level should be. International standards bodies have made recommendations (the EBU suggests -18dBFS, while SMPTE specifies -20dBFS), but many people are either ignorant of these or choose to ignore them!
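These digital operating levels only translate into real analogue voltages once a converter is aligned. This sketch assumes the two alignments commonly paired with them (the EBU's -18dBFS = 0dBu, and the -20dBFS = +4dBu practice widespread in the US); check your own converter's specification, since many allow the alignment to be changed. The function name is illustrative.

```python
def dbfs_to_dbu(level_dbfs: float, align_dbfs: float, align_dbu: float) -> float:
    """Map a digital level to the analogue level implied by an alignment point."""
    return level_dbfs - align_dbfs + align_dbu


alignments = {
    "EBU, -18dBFS = 0dBu": (-18.0, 0.0),
    "US practice, -20dBFS = +4dBu": (-20.0, 4.0),
}
for name, (a_dbfs, a_dbu) in alignments.items():
    full_scale = dbfs_to_dbu(0.0, a_dbfs, a_dbu)
    print(f"{name}: 0dBFS corresponds to {full_scale:+.0f}dBu")
```

With the second alignment, digital full scale lands at +24dBu, matching the analogue clipping level described above.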

However, by embracing these nominal operating levels and introducing a sensible headroom margin, the recording and mixing process (and the sound) becomes much better. In practice, that means maintaining average signal levels around -20dBFS or a little higher, with peaks going no higher than about -10dBFS. When working this way, the risk of clipping while recording vanishes. Mixing no longer requires the main fader to be pulled back to avoid output overloads. The DAW is less likely to indulge in floating-point calculations with the potential for rounding errors and plug-in issues. And even more importantly, the analogue interfacing employed in the front-end mic preamps and back-end monitoring chain works with the kinds of signal levels it was designed for (around +4dBu), instead of always being close to clipping! That condition alone makes most systems sound considerably sweeter and more ‘analogue’.

Some will argue that allowing 20dB of headroom compromises the noise floor by 20dB. This is technically true, but completely irrelevant. The noise floor of even budget 24-bit digital converters will be better than -115dBFS, so leaving a very generous 20dB headroom margin still puts the noise floor 95dB below the new operating level. Comparing this to the analogue world, few high-end analogue consoles will have a noise floor below -90dBu, and with clipping at +24dBu the total dynamic range is an almost identical 114dB. In practice, then, the noise performance of a digital system, even with a 20dB headroom margin, is no worse than that of a high-end analogue system, and will probably be slightly better. Hugh Robjohns
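The comparison above reduces to simple subtraction. This sketch uses only the figures quoted in the text and just makes the bookkeeping explicit; the function name is illustrative.

```python
def dynamic_range_db(clip_level: float, noise_floor: float) -> float:
    """Distance in dB between clipping and the noise floor (same reference for both)."""
    return clip_level - noise_floor


headroom = 20.0
digital = dynamic_range_db(0.0, -115.0)  # budget 24-bit converter, in dBFS
analogue = dynamic_range_db(24.0, -90.0)  # high-end console, in dBu

for name, total in (("digital", digital), ("analogue", analogue)):
    print(f"{name}: {total:.0f} dB in total, "
          f"noise {total - headroom:.0f} dB below the operating level")
```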

Myth: It’s not necessary to clear samples that are less than three seconds long.

Fact: Copyright law relating to sampling varies significantly between the US and the UK, but in both cases, copyright infringement is adjudged to have taken place when a ‘substantial’ part of a work is used without permission. In neither case is ‘substantial’ defined in terms of the duration of the sample! Indeed, in the now-notorious case of Bridgeport Music, Inc. vs Dimension Films, unauthorised use of a two-second guitar chord was deemed a copyright violation. In making this judgement, the US Court of Appeals explicitly rejected the so-called ‘de minimis defence’ that a short sample should not be considered ‘substantial’ merely because of its length. Sam Inglis

Myth: Carpets and eggboxes make very cost-effective acoustic treatment.

Egg boxes: great for packaging eggs, rubbish at absorbing sound! Photo: Hans Braxmeier

Fact: The usual aim in treating studio acoustics is to achieve a short reverberation time which is well balanced at all frequencies. However, not all sound waves are the same! High frequencies have very short wavelengths (a 16kHz sound has a wavelength of 2.1cm, for example), while low frequencies have very long wavelengths (the wavelength at 40Hz is about 8.6 metres).

Excessive reverberation is usually controlled by absorbing the unwanted sound so that it can’t bounce around in the room. Sound waves are conveyed by the movement of air particles, and all absorbers work by converting the motion of those air particles into heat, generally by forcing them through convoluted paths in foam or mineral wool panels. This energy conversion is most efficient if applied at the point where the air particles are moving the most, and that’s at a quarter of the wavelength away from the room boundary surfaces. Clearly, since the wavelength changes with frequency, so does the optimum position and thickness of absorber.
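The wavelengths and quarter-wave distances involved are easy to compute. This sketch assumes a speed of sound of 343 m/s and uses the quarter-wave depth as a rough rule of thumb for where an absorber of a given thickness becomes most effective; porous absorbers still work to some extent below that frequency, so treat the figures as indicative only. The function names are illustrative.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed)


def wavelength_m(freq_hz: float) -> float:
    """Wavelength in metres for a given frequency."""
    return SPEED_OF_SOUND / freq_hz


def quarter_wave_m(freq_hz: float) -> float:
    """Distance from a rigid boundary at which particle velocity peaks."""
    return wavelength_m(freq_hz) / 4.0


def quarter_wave_frequency_hz(depth_m: float) -> float:
    """Frequency whose quarter wavelength equals the absorber depth (rule of thumb)."""
    return SPEED_OF_SOUND / (4.0 * depth_m)


for f in (16000, 1000, 250, 100, 40):
    print(f"{f:>5} Hz: wavelength {wavelength_m(f):6.3f} m, "
          f"quarter-wave point {quarter_wave_m(f) * 100:6.1f} cm from the wall")

for name, depth in (("10mm carpet", 0.01), ("100mm panel", 0.1)):
    print(f"{name}: most effective above roughly {quarter_wave_frequency_hz(depth):.0f} Hz")
```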

Very thin absorbers will absorb high frequencies extremely well, but do nothing for low frequencies at all, and that’s where carpet enters the scene. Lining a studio with carpet directly on the walls will only soak up the highest frequencies, leaving all the middle and low frequencies to bounce around the room uncontrolled, with the result usually sounding unacceptably boxy and boomy. Carpet can be useful as a tough, protective wall covering, but only if it is placed in front of conventional broadband absorbers of suitable depth to control the lower frequencies. For this to work the carpet must be ‘breathable’ and allow air to flow through so that the mid- and low-frequency sounds can pass through to the absorbers behind. So woven, fabric-backed carpets can be used, but not rubber-backed types!

Eggboxes on the wall vaguely resemble quadratic diffusers, which is probably where this myth came from. However, the dimensions and the construction material are both completely inappropriate. Worse still, when placed on walls the eggs keep falling out! A really stupid idea that warrants no further discussion! Hugh Robjohns

Myth: Drums should always be recorded first, and vocals last.

Fact: It’s perhaps stretching a point to call this one a myth, since I’ve rarely seen it stated explicitly as positive advice, but it is an assumption that many people never question. If you stop to think about it, though, there’s no very compelling reason why a particular order of doing things should be set in stone. In fact, it could be argued that this convention is a historical survival from the days when track counts were very restrictive and bouncing was often necessary.

Since the vocals are the most important part of a typical pop mix, the engineer would naturally want to have them isolated on their own track rather than bounced down, which meant recording them last of all. Conversely, multitracked drums took up a lot of tape real estate and, as a relatively continuous and unvarying element of the song, could be bounced more successfully than lead instruments.

Where an arrangement is being laid down track by track, it does sometimes make sense to start with the drums and rhythm parts, but this is by no means always true. I’m sure we’ve all had the experience of laboriously programming a sequenced drum part, or tracking down what we thought was the perfect sampled loop, only to pick up a guitar or sit down at the piano and discover that our rhythm part doesn’t sit comfortably with the feel of the song after all. If a song is based around a featured instrument, riff or bass line, why not try laying that down first? Don’t be afraid that your drummer won’t be able to track his or her part on top of existing instruments: if they’re good at their job it won’t be a problem — but it will give them the opportunity to respond creatively to variations and dynamic changes in those parts.

The convention of tracking vocals last is equally arbitrary and, now that we’re free from the restrictions imposed by four-track tape, it makes even less sense. It leads inexorably to a situation where your singer is forced to perform all of his or her vocal parts within an ever-narrowing window of time at the end of the sessions: a recipe for stress, loss of judgement and, ultimately, loss of voice. It also presents the disastrous temptation of leaving the lyrics unwritten until the night before said vocal sessions!

Far better, surely, to insist that songs be properly finished before they’re recorded, and to track each song’s lead vocal as soon as possible, when the song is fresh and the band are still excited by it. And better, surely, for other parts to be responding to and complementing the vocal performance, than for the singer to have to struggle to squeeze his or her lines into a mess of instrumental overdubs. After all, Elvis Presley and Frank Sinatra insisted on recording their vocals not only live with their backing musicians, but in the same room. Between them, they achieved modest success. Sam Inglis

Myth: Frequency response charts tell you how a piece of equipment will sound.

Fact: The frequency response is only one — albeit important — aspect of an audio device. It gives some indication of the tonal character, but there are many other technical parameters that also contribute significantly. These include the total harmonic (and non-harmonic) distortions, signal-to-noise ratio, phase response and group delay, crosstalk, and much more besides.

In the case of loudspeakers and microphones, the published frequency response often only reveals the on-axis response. However, the actual sound heard is that obtained from (or reproduced in) all directions. Another important aspect with loudspeakers, in particular, is their ability to store energy (mainly in the cabinet) and release it slowly over time. This can cause low-frequency notes to boom and smear, and is revealed in a ‘waterfall plot’. Hugh Robjohns

Myth: Digital audio has steps.

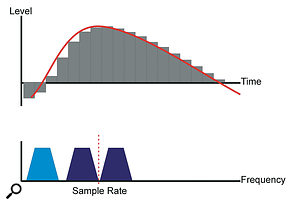

Although a digital system represents audio internally as a series of discrete, ‘stepped’ values, the process of D-A conversion recreates the continuous analogue signal that was originally recorded.

Fact: This myth arises from overly simplistic explanations of digital audio technology. The amplitude is quantised to produce discrete amplitude values, often shown as horizontal level steps. However, such a simple design would introduce non-linearities which would be completely unacceptable in a high-quality audio system. Instead, ‘dithering’ is employed to introduce random (and hence noise-like) variations in the encoded audio level which completely negates the quantising steps, delivering a totally linear system with a fixed noise floor, the latter being at the equivalent level of one quantising step, or around -93dBFS for a 16-bit system.
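The effect of dither can be shown with a sine wave smaller than half a quantising step. This sketch assumes triangular-PDF (TPDF) dither and works in units of one quantising step: without dither, every sample rounds to zero and the signal simply vanishes; with dither, each output sample is noisy, but its average over many trials follows the original waveform, showing that the low-level detail survives beneath a constant noise floor instead of being replaced by steps. The averaging is only a way of revealing this numerically; the ear does a similar job of hearing the tone through the noise.

```python
import math
import random


def quantise(x: float) -> int:
    """Round to the nearest quantising step (1 step = 1.0 in these units)."""
    return math.floor(x + 0.5)


def tpdf_dither(rng: random.Random) -> float:
    """Triangular-PDF dither spanning +/-1 step: the sum of two uniform values."""
    return rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)


rng = random.Random(0)
amplitude = 0.4  # a sine smaller than half a quantising step
signal = [amplitude * math.sin(2 * math.pi * n / 16) for n in range(16)]

undithered = [quantise(x) for x in signal]  # every sample rounds to zero
trials = 2000
averaged = [sum(quantise(x + tpdf_dither(rng)) for _ in range(trials)) / trials
            for x in signal]  # the mean of the dithered output tracks the input

print("undithered:", undithered)
print("dithered, averaged:", [round(a, 2) for a in averaged])
```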

There are no steps in the time domain either, and where they are illustrated as holding periods between samples, they actually represent unfiltered modulation products (called images) which exist outside the wanted audio band as a by-product of the sampling process. The reconstruction filter which forms the final stage of all D-A converters removes these images and reproduces the expected smooth, continuous analogue audio signal. Hugh Robjohns
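The images sit at predictable frequencies. For a tone at f sampled at fs, they appear at k·fs ± f for k = 1, 2, 3 and so on. The short Python sketch below lists them; the function name is hypothetical. It also shows the gap the reconstruction filter has to work with. In a 44.1kHz system with a 20kHz audio band, the lowest image the filter must remove lies at 24.1kHz.

```python
def image_frequencies(f_hz: int, fs_hz: int, orders: int = 2) -> list:
    """Image frequencies k*fs - f and k*fs + f for k = 1..orders."""
    images = []
    for k in range(1, orders + 1):
        images.extend([k * fs_hz - f_hz, k * fs_hz + f_hz])
    return images


if __name__ == "__main__":
    fs = 44_100
    print(image_frequencies(1_000, fs))  # [43100, 45100, 87200, 89200]
    # The top of the audio band sets the lowest image the filter must remove.
    lowest_image = image_frequencies(20_000, fs, orders=1)[0]
    print(f"pass up to 20000 Hz, stop from {lowest_image} Hz")
```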

Myth: Digital audio interfaces can provide ‘zero-latency’ monitoring.

Fact: When we monitor audio that is being recorded to a computer, or generated by one in response to MIDI input, there is a delay between input and output. This is a product of multiple factors. Usually, the most important of these is the requirement that, once sampled at the input, the audio has to be stored in a buffer before being written to a storage medium. Audio being read from a hard drive is likewise buffered on its way to the soundcard’s outputs.

We can control the delay by reducing the size of the buffer, but this places increasing strain on the computer’s processor, tending to reduce performance.
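The relationship between buffer size and delay is simple arithmetic: a buffer of N samples at sample rate fs holds N/fs seconds of audio. The Python sketch below assumes one input buffer and one output buffer of equal size in the monitoring path. This is a simplification, because real drivers and applications often add further safety buffering. It also treats converter delay as a parameter rather than a known figure. The function names are hypothetical.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: float) -> float:
    """Duration of one buffer of audio, in milliseconds."""
    return 1000 * buffer_samples / sample_rate_hz


def round_trip_ms(buffer_samples: int, sample_rate_hz: float,
                  converter_ms: float = 0.0) -> float:
    """Input buffer + output buffer + an assumed A-D/D-A conversion delay."""
    return 2 * buffer_latency_ms(buffer_samples, sample_rate_hz) + converter_ms


if __name__ == "__main__":
    for size in (64, 128, 256, 512):
        print(f"{size:4d} samples @ 44.1kHz: "
              f"{round_trip_ms(size, 44_100):5.1f} ms round trip (buffers only)")
```

Halving the buffer halves the delay, but the processor must then service the audio twice as often and has less time to finish each batch. That is where the strain on performance comes from.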

There is no way to eliminate buffering delays from sources such as soft synths that are generated by the computer, but most digital audio interfaces now offer an alternative monitoring path for audio that originates in the outside world. Typically, a separate virtual mixer application is used to route signals directly from the soundcard inputs to its outputs, as well as to the application that is recording your audio. It is this direct path that is monitored, and by this means, the computer’s input and output buffers are bypassed. This significantly reduces monitoring latency for these signals, but it is not, as is often claimed, ‘zero-latency monitoring’.

Whether or not an audio interface supports direct monitoring of this kind, the monitored audio still has to be converted from analogue to digital and back again before it can be passed to your headphones, and it is not possible for either conversion stage to happen instantaneously. The combined delay due to A-D and D-A conversion might only be a millisecond or two, but it is nevertheless a delay (and even the direct monitoring paths in audio interfaces often introduce a small amount of further delay). Although a latency of a few milliseconds might not be detectable directly, that doesn’t necessarily make it irrelevant. For example, if there is significant spill from headphones or other monitoring sources into instrument mics, a delay of this order can be enough to cause problematic comb filtering in the recorded audio. Comb filtering between the monitored and direct sound leaking into headphones can also affect the engineer’s judgement when choosing and positioning microphones. Sam Inglis
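The comb-filtering effect can be quantified. Suppose spill from the monitors reaches a microphone as a copy of the direct sound delayed by τ seconds. The sum then has notches at odd multiples of 1/(2τ) and peaks at multiples of 1/τ. The Python sketch below assumes a single delayed copy at a fixed relative level and ignores the acoustic path from headphones to mic; the function names are hypothetical. With a 1ms delay, the first notch falls at 500Hz, well inside the audio band.

```python
import math


def comb_gain_db(f_hz: float, delay_s: float, rel_level: float = 1.0) -> float:
    """Gain of direct sound plus one delayed copy, |1 + g*e^(-j*2*pi*f*tau)|."""
    phase = 2 * math.pi * f_hz * delay_s
    magnitude = math.hypot(1 + rel_level * math.cos(phase),
                           rel_level * math.sin(phase))
    return 20 * math.log10(magnitude) if magnitude > 0 else float("-inf")


def notch_frequencies(delay_s: float, f_max_hz: float) -> list:
    """Frequencies (odd multiples of 1 / (2 * delay)) where the copies cancel."""
    notches = []
    k = 0
    while (2 * k + 1) / (2 * delay_s) <= f_max_hz:
        notches.append((2 * k + 1) / (2 * delay_s))
        k += 1
    return notches


if __name__ == "__main__":
    delay = 0.001  # assumed 1 ms monitoring latency
    print([round(f) for f in notch_frequencies(delay, 5_000)])
    # Nearly equal-level spill (g = 0.9) gives deep notches; spill 6 dB down
    # (g = 0.5) still leaves several dB of ripple.
    for g in (0.9, 0.5):
        peak = comb_gain_db(1_000, delay, g)
        dip = comb_gain_db(500, delay, g)
        print(f"spill gain {g}: peak {peak:+.1f} dB, dip {dip:+.1f} dB")
```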

Historical Myths

Myth: Georg Neumann invented the capacitor microphone.

The Neumann CMV3, sometimes called the ‘Hitler mic’, was not the world’s first commercially available capacitor microphone; nor was it invented at Hitler’s behest, since its introduction pre-dated his rise to power.

Fact: The idea of using a variable capacitor to convert sound pressure variations into electrical signals was patented by Bell Labs in 1920, and the first commercially available capacitor (condenser) microphone was produced by Western Electric in 1922. WE’s 394 Condenser Transmitter design was licensed to other manufacturers worldwide — including AEG in Germany, where a young engineer named Georg Neumann happened to work. After a brief period of popularity in its native USA, the technology was eclipsed by then-new ribbon microphones, and there would never again be a major American manufacturer of capacitor microphones. However, Neumann and his colleagues continued to improve and refine the capacitor microphone and, in 1928, introduced the famous CMV3 ‘bottle’ mic. The rest, as they say, is history.

Myth: The length of a CD was fixed at 74 minutes so that Beethoven’s Ninth Symphony would fit on one disc.

Fact: The story that Sony Vice-President Norio Ohga insisted on the new medium being able to accommodate Wilhelm Furtwängler’s reading of Beethoven’s Ninth — at the time, the longest recorded performance of the piece — has passed not only into legend but also into many official histories. However, according to former Philips researcher Kees Immink, the 120mm diameter and 74-minute running time of the CD were actually the result of undignified horse-trading between Sony and Philips, whose relationship as co-developers of the format was sometimes rocky. Until quite late in the development process, the disc was to have been 115mm in diameter, but this would have given Philips a competitive advantage, as their subsidiary Polygram already had a plant set up to produce 115mm discs. To level the playing field, Ohga insisted on a late change in the size of the disc.

Myth: Today’s bedroom studios are hundreds of times more powerful than anything the Beatles had.

Fact: This is true if you take track counts as the only measure of how powerful a studio is. But is that really the most important thing? There are one or two of us who would happily trade unlimited audio and MIDI tracks for the chance to spend weeks or months in a large purpose-built recording space, with a team of the most talented engineers and technicians, guided by the incomparable George Martin. Sam Inglis