When you record multiple sources in one room, bleed is inevitable. And, used creatively, bleed can make good recordings even better...

Like many engineers, I like learning about recording history. I enjoy trying to piece together how the great records of the past were made, and considering what lessons I might learn and apply to my own work. And when I'm reading interviews and biographies, I'm struck by how often engineers who cut their recording teeth in the '60s enthuse about the positive qualities of microphone bleed. For many engineers today, that might seem confusing: many of us now view the leakage of sound from one instrument into another's microphone as a problem to be solved. To that end, we've developed myriad ways to isolate sounds and suppress spill. Solutions include: building the record in piecemeal fashion, by recording one track at a time; the old woollen-blanket treatment or the use of more advanced acoustic screens when recording; gates, expanders and other dynamics processors; spectral editing; and, more recently, intelligent 'unmixing' software that analyses frequency components in a recording in order to detangle the whole into its separate source sounds.

Separation Anxiety

But what caused this obsession with isolating sound sources? Why do we feel the need to minimise bleed in the first place? Well, there are a number of valid reasons, listed below.

Controlling The Content: Repairing individual off-key notes is almost impossible when all the other instruments' mics pick up the offending instrument too, and while solving timing issues can be possible [see this month's Session Notes, for example — Ed.], it's often not. Replacing one musician's entire part with a new one is harder still. By contrast, if you record every component in a piece of music in isolation, you're always able to change the content of each recording after the fact. If you lack confidence in the arrangement or the performance, or in your own ability to judge these things while focusing on the technical aspects of a recording, retaining this degree of control for the mix stage can obviously seem appealing.

Controlling The Mix Balance: In any mix, all sources are interdependent. Spill increases the degree of interdependence, and thus makes radical deviations from the acoustical balance of the recording room impossible. If you pursue a sound that's not considered a 'natural' one, you need sources that won't drag other instruments up, down, left or right with them as you ride the faders or tweak the pan pots. For example, a modern metal band simply can't sound, acoustically, the way the producer intends them to sound on the finished record. So it usually makes good sense to capture 'clean', isolated tracks for further processing and triggering.

Preventing Blurring: Problematic bleed (of which more later) distorts the natural sound and spatial positioning of instruments — the instruments are added to the mix at more than one position in time, due to the different distances from the source to each mic, and this 'blurs' or 'smears' their sound. Comb filtering, masking, loss of perspective, and conflicting or confusing stereo placement can all arise from such problematic bleed.

Coping With Problem Environments: If a recording room is too small or too lively, or if a stage is littered with loud monitor wedges, an engineer might choose to keep the negative outside influences from penetrating the recording by isolating the instruments from their surroundings as much as possible. (Of course this also eradicates any beneficial instrument bleed.)

Keeping Creative Options Open: More and more, pop music in particular is created in smaller studios, with the writing and recording processes often intertwined, and arrangements being tweaked well after mixing has begun. Having individual control over each component is both a consequence of and a precondition for that way of working.

Bleeding Benefits

Still, while there may be obvious reasons to strive for greater isolation, there can be downsides, too. One of these is that (even with the modern pop-style production mentioned above) you lose out on the benefits of the classic approach of first finishing the composition, arrangement and instrumentation of a song, then having a band or artists rehearse the piece, and then recording them playing it together, in one performance.

In my experience, when creating an album of original material with a band, the band usually becomes better at performing those songs once they're well into the promotional tour. Arrangements have matured by then, routines have been built, and individual performance details nailed down. If you record them in that state, all playing together in the same room, you'll often capture something that feels more 'real' than the album. It may lack some of the album's 'polish' and some specific sounds, but if the recording and performance are good, I find that I rely less on production tricks to create a record that sounds striking — to me and to an audience.

Frank Zappa famously assembled some of his records from various live recordings, and he wasn't alone in that. Nowadays, many electronic musicians attract plenty of YouTube views with hybrids of prepared studio production and live performance (check out Binkbeats, for instance: www.binkbeats.com/video). And even if you produce electronic music, performing your work live and recording it in one go can be beneficial — it can certainly provide you with a solid base, hopefully containing some moments of improvised magic, which you can still edit and add to without losing its one-off quality. Such an approach does require some planning of which sources you'll want to record acoustically, of course, but you can be sure your work will sound different, and that it will gain a more 'organic' quality.

Hopefully, I've persuaded you that capturing the energy of a single, unique performance can work well, and is at least worth trying if you've not attempted it before. But if capturing your performance involves using microphones, there's still that small matter of bleed to consider...

When & Why Is Bleed Problematic?

Any sound that doesn't add new information to a mix (in other words, something that is only a slightly out-of-time copy of existing information) has the potential to cause problems. By 'slightly' I mean any time interval up to about 5-10 milliseconds (which, given the speed of sound, equates to a distance of about 1.7 to 3.4 metres). This issue obviously arises when you mic closely spaced sources individually, but it's also inevitably encountered when you record anything in a small room.
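
The conversion between time and distance in the paragraph above is simply the speed of sound multiplied by time. A minimal Python sketch, assuming sound travels at about 343 m/s (dry air at roughly 20°C; the figure varies with temperature):

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 degrees C


def delay_to_distance_m(delay_ms: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Extra path length (metres) that produces a given arrival-time difference."""
    return c * delay_ms / 1000.0


def distance_to_delay_ms(distance_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Arrival-time difference (milliseconds) produced by a given extra path length."""
    return 1000.0 * distance_m / c


for ms in (5.0, 10.0):
    print(f"{ms:4.1f} ms  ->  {delay_to_distance_m(ms):.2f} m")
```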

When close-miking a drum kit, each mic picks up some bleed from other kit pieces, and it's impossible to align the resulting signals perfectly. Here, the sound from the snare will reach mics 1, 2 and 3 at different times, as would the sound from each tom. While you could align the resulting signals to give a tighter snare sound, you could not also align the tom sounds — so it will always be a compromise.

A close-miked drum kit provides a great illustration of the problem posed by individually miking closely spaced sources. The snare is usually so close to the small tom that you can end up with practically the same snare sound in the tom mic as in the snare's own mic. As the tom is, similarly, picked up by both mics, it's impossible to time-align these leaked signals perfectly. You can align the snare or you can align the tom, but not both of them; and so the sound suffers from comb filtering. The shorter the distance between the two mics, the more bleed there is, and the more audible this filtering becomes. If you pan the two mics hard left and right the problem disappears, because your hearing system interprets small time differences between left and right as information about source localisation, not comb filtering. Alas, your joy will be short-lived, and for a couple of reasons. First, the localisation will interfere with the kit's stereo image; even if you could live with the snare on the left and the tom on the right, the spatial image would blur when adding the overheads. Second, when the conflicting sounds are summed to mono, the comb filtering returns. When you consider this, it's unsurprising that mix engineers working on multi-miked kits can find themselves spending so much time chasing their tails as they try to compensate for timing and phase issues! As a rule, hard panning closely spaced mics only really works when you don't need to add other mics — for example, when using an A-B stereo pair to record classical music.
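
To put rough numbers on this, here is a Python sketch of two copies of the same sound summed together, assuming a single relative delay τ and a linear bleed gain g (the 1ms snare-to-tom delay and the gain values are hypothetical). The sum cancels at odd multiples of 1/(2τ), and the gap between peaks and notches widens as g approaches 1, which is why more bleed makes the filtering more audible.

```python
import cmath
import math


def comb_notches_hz(delay_ms: float, max_hz: float) -> list:
    """Notch frequencies for a signal summed with a delayed copy: f = (2k + 1) / (2 * tau)."""
    tau = delay_ms / 1000.0  # relative delay in seconds
    notches = []
    k = 0
    while (2 * k + 1) / (2 * tau) <= max_hz:
        notches.append((2 * k + 1) / (2 * tau))
        k += 1
    return notches


def summed_level_db(freq_hz: float, delay_ms: float, bleed_gain: float) -> float:
    """Level of direct sound plus delayed bleed at one frequency, relative to direct alone.

    bleed_gain is the linear level of the bleed copy (1.0 = as loud as the direct sound).
    """
    tau = delay_ms / 1000.0
    h = 1 + bleed_gain * cmath.exp(-2j * math.pi * freq_hz * tau)
    return 20 * math.log10(max(abs(h), 1e-12))  # floor avoids log10(0) at a perfect null


def ripple_depth_db(bleed_gain: float) -> float:
    """Peak-to-notch depth of the comb: peaks at 1 + g, notches at 1 - g."""
    return 20 * math.log10((1 + bleed_gain) / max(1 - bleed_gain, 1e-12))


# Hypothetical case: snare bleed reaching the tom mic 1 ms after the snare mic.
print([round(f) for f in comb_notches_hz(1.0, 5000.0)])
for g in (0.3, 0.6, 0.9):
    print(f"bleed gain {g}: ripple {ripple_depth_db(g):.1f} dB")
```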

No amount of time and phase alignment will extract you from the mess caused by small-room reflections, though. The only solution is to record the least amount of reflected sound possible, or rather, to increase the ratio of the wanted direct sound to the unwanted reflections. This means placing mics extremely close to the source, but sadly this doesn't do the recorded sound itself any favours! Such close placements mean instrument resonances originating close to the microphone will be strong, while those emanating from the opposite end of the instrument will be weak by comparison. This can be corrected to some extent using EQ and/or compression, to tame the overly strong components and bring up the weaker ones. You can also use distortion to generate frequency content you were unable to capture, and to clip any overly loud transients. But this brings us back to the original problem: the more dynamics processing you resort to, the more you'll tend to increase the level of the bleed, whether it be from instruments or reflections.
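
The imbalance described above follows from the inverse-square law. The sketch below, which assumes two equal point sources in a free field (no reflections, no directivity) separated by a hypothetical 0.4m, compares how much louder the near end of an instrument is than the far end as the mic moves away:

```python
import math


def level_difference_db(near_m: float, far_m: float) -> float:
    """Inverse-square (free-field) level difference between two equal point sources."""
    return 20 * math.log10(far_m / near_m)


# Hypothetical instrument whose two radiating ends are 0.4 m apart, with the mic on-axis
# beyond one end; room reflections and directivity are ignored.
SPAN_M = 0.4
for mic_distance_m in (0.05, 0.3, 1.0, 2.0):
    diff = level_difference_db(mic_distance_m, mic_distance_m + SPAN_M)
    print(f"mic {mic_distance_m:.2f} m from the near end: near end {diff:.1f} dB louder")
```

With the mic at 5cm the near end dominates by roughly 19dB; at a metre the difference falls to around 3dB, which is why close placements tend to need corrective processing.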

If the mics are relatively close to their sources, things get significantly better when the distance between the mics themselves is greater than about three metres, as the signals will be sufficiently separated in time to prevent obvious filtering when they combine. Of course, you can't always avoid placing mics closer to one another than that, and in this case you can mitigate the problem by choosing strongly directional mics. Ribbons and other true figure-of-eight microphones excel in this role, as they have a narrow pickup arc at both the front and rear, and a 'null', where the mic picks up nothing and thus 'rejects' sound, at each side. The mic can be positioned so that the front points at the instrument you wish to capture, the side-nulls aim at sounds you want to avoid, and the rear points at (hopefully) nothing much at all. But for some setups a cardioid-pattern mic, which has its 'dead spot' at 180 degrees (ie. to the rear), or a hypercardioid (whose nulls lie between the two, at roughly 110 degrees off-axis) may be a more suitable option. If you can manage, through mic choice and distance, to suppress leakage by at least 10dB (over the entire frequency spectrum) in relation to the sound of interest in a particular microphone, you will at least be able to work with it.
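
The idealised first-order polar equation, sensitivity = A + B·cos θ, makes the null angles and rejection figures in the paragraph above easy to estimate. This sketch uses textbook A/B values for each pattern plus a free-field distance term; real microphones deviate from these patterns, especially at the frequency extremes, and room reflections arrive from every angle, so treat the results as optimistic. The layout in the example is hypothetical:

```python
import math
from typing import Optional

# Idealised first-order patterns: sensitivity = A + B*cos(theta), with A + B = 1 on-axis.
PATTERNS = {
    "omni": (1.0, 0.0),
    "cardioid": (0.5, 0.5),
    "hypercardioid": (0.25, 0.75),
    "figure-of-eight": (0.0, 1.0),
}


def pickup_db(pattern: str, angle_deg: float) -> float:
    """Sensitivity at angle_deg off the front axis, relative to on-axis, in dB."""
    a, b = PATTERNS[pattern]
    gain = abs(a + b * math.cos(math.radians(angle_deg)))
    return 20 * math.log10(max(gain, 1e-6))  # true nulls are clamped to -120 dB


def null_angle_deg(pattern: str) -> Optional[float]:
    """Angle at which A + B*cos(theta) = 0, or None if the pattern has no null."""
    a, b = PATTERNS[pattern]
    if b == 0 or a > b:
        return None
    return math.degrees(math.acos(-a / b))


def leakage_suppression_db(pattern: str, bleed_angle_deg: float,
                           wanted_m: float, bleed_m: float) -> float:
    """Free-field estimate: pattern rejection plus inverse-square distance advantage.

    Assumes the wanted source sits on-axis (0 dB pickup).
    """
    return -pickup_db(pattern, bleed_angle_deg) + 20 * math.log10(bleed_m / wanted_m)


# Hypothetical layout: wanted source 0.3 m on-axis, bleed source 1.5 m away at 135 degrees.
for name in PATTERNS:
    null = null_angle_deg(name)
    null_txt = "none" if null is None else f"{null:.0f} deg"
    est = leakage_suppression_db(name, 135.0, 0.3, 1.5)
    print(f"{name:15s} null {null_txt:8s} suppression ~{est:.1f} dB")
```

In this particular layout the cardioid comes out ahead, because at 135 degrees the hypercardioid's rear lobe is already picking sound up again; with the bleed source at 90 degrees, the figure-of-eight wins instead.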

The Whole Picture

While saving the day (preventing the worst-case scenario where slightly out-of-time copies of the same signal are combined) is important, this merely gives us an 'acceptable' result. And shouldn't we be aiming for better than that? The real fun and the most satisfying results begin when we start to embrace the upside of bleed, which is that it can be captured deliberately to add useful new information to the mix.

The sound of most instruments varies according to the listening angle. Depending on its physical construction, an instrument's resonances form different types of sound sources that can differ in directivity and orientation. An acoustic guitar, for example, consists of many monopole, dipole and more complex sources. The guitar body (which also acts as a boundary) further shapes the radiation characteristics of these sources, making for a very complex overall radiation pattern. Thus, at short distances there's no single optimum listening angle at which you can appreciate the instrument as a balanced-sounding whole.

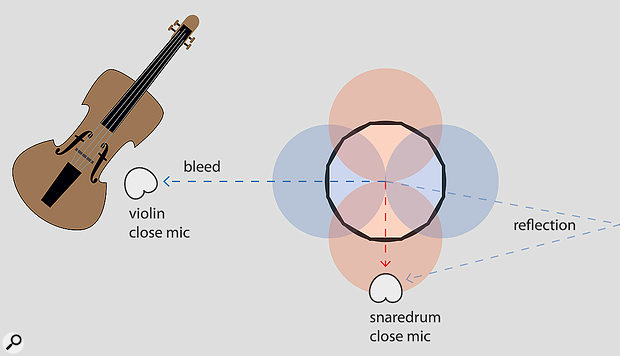

Figure 1: The red and blue coloured figures of eight represent two of the many modes of vibration in a snare drum. The snare drum's close mic can't capture all these vibrations equally well due to distance ratios and differences in directivity between modes. But since a part of the snare drum sound also ends up as bleed in the violin mic, and because of reflections that bounce sound back to the microphones, a more complete picture of the snare drum can be captured.

But when you move away from the instrument in a nice-sounding room, the acoustics help to complete the picture — eventually, almost all the sound the instrument produces will end up at the listening position in the form of reflections. The same applies to placing a single mic, in that close-miking from one angle basically removes most other angles from the equation. However, if the frequency components that radiate away from this mic end up in other microphones as bleed, this can be a good thing. Courtesy of the bleed, you've captured a more complete, even-sounding image of the instrument (see Figure 1).

Of course, as well as new information, the bleed contains information already picked up by the close mic. To prevent comb filtering, then, it's important to maintain a relatively large distance between the mics. You do have some freedom in positioning the mics: if you manage to keep the difference in arrival time between two mics somewhere between 10 and 40 milliseconds, the signals will, perceptually, fuse into a single sound source. The first-arriving signal determines the source's localisation, but the bleed and reflections that arrive later can make a big contribution to the source's timbre. This principle is known as the Haas effect, and the type of signal determines how big the time gap can be in practice. Percussion will start to echo beyond 20ms of delay, while organs will sometimes still fuse into a single source at 100ms and beyond.
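
The arrival-time windows above can be turned into a quick layout check. A Python sketch, assuming 343 m/s for the speed of sound and treating the text's rough thresholds (below 10ms: comb-filtering risk; above about 20ms for percussion, 40ms in general or 100ms for organ: audible echo) as hard cut-offs, which in reality they are not. The snare and vocal-mic distances are hypothetical:

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees C

# Thresholds taken from the text; they are rough, signal-dependent rules of thumb.
COMB_RISK_BELOW_MS = 10.0
ECHO_ABOVE_MS = {"percussion": 20.0, "general": 40.0, "organ": 100.0}


def arrival_gap_ms(path_a_m: float, path_b_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Arrival-time difference (ms) between two paths from the same source."""
    return 1000.0 * abs(path_b_m - path_a_m) / c


def classify_gap(gap_ms: float, signal: str = "general") -> str:
    """Rough perceptual category for a given arrival-time gap."""
    if gap_ms < COMB_RISK_BELOW_MS:
        return "comb filtering likely"
    if gap_ms <= ECHO_ABOVE_MS[signal]:
        return "fuses with the first arrival"
    return "heard as a separate echo"


# Hypothetical layout: snare 0.1 m from its own mic and 4.0 m from the vocal mic.
gap = arrival_gap_ms(0.1, 4.0)
print(f"{gap:.1f} ms -> {classify_gap(gap, 'percussion')}")
```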

A typical orchestral concert venue provides a good example of why capturing reflected sound can be so useful — although the venue itself is a very large space, note that there are lots of reflective 'walls' around the orchestra itself. This helps the musicians to hear a more balanced sound from the orchestra.

From these values, it's possible to calculate the optimum room size for the instruments in question. In small rooms, gaps in time between direct and reflected sound of 7ms (a path difference of about 2.4 metres) are not uncommon, and this causes audible filtering. In a church, the first reflection often arrives so late that it won't fuse with the source, and won't contribute much to its sound. A space roughly 10 metres in diameter with a high ceiling seems a good average for most styles of music. Incidentally, if you're wondering why classical concert halls are often bigger than this, look more closely: the surfaces surrounding the instruments (apart from the audience area) are relatively close by. This provides the musicians in the orchestra with early reflections, so they can hear themselves. It also helps to deliver to the audience a more complete sound of the orchestra, because sound emanating from the back of the orchestra is reflected towards the audience in time to fuse with the direct sound.
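
To see why room size matters, the delay of the first wall reflection can be estimated with the image-source method: mirror the source in each wall and measure the extra path length. This sketch is a simplification under stated assumptions: a 2D plan view of a rectangular room, perfectly reflective walls, and no floor or ceiling reflections (the floor bounce in particular often arrives earlier than any wall reflection and is not modelled). Both layouts are hypothetical, with source and mic 2m apart:

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees C


def first_reflection_gap_ms(room_xy, source_xy, mic_xy, c=SPEED_OF_SOUND_M_S):
    """Delay (ms) of the earliest first-order wall reflection relative to the direct sound.

    2D plan view of a rectangular room with specular walls; floor and ceiling are ignored.
    """
    width, length = room_xy
    sx, sy = source_xy
    mx, my = mic_xy
    if not (0 < sx < width and 0 < sy < length and 0 < mx < width and 0 < my < length):
        raise ValueError("source and mic must be inside the room")
    # Mirror images of the source in the four walls (x = 0, x = width, y = 0, y = length).
    images = [(-sx, sy), (2 * width - sx, sy), (sx, -sy), (sx, 2 * length - sy)]
    direct = math.dist(source_xy, mic_xy)
    earliest = min(math.dist(img, mic_xy) for img in images)
    return 1000.0 * (earliest - direct) / c


print(f"10m by 10m room: {first_reflection_gap_ms((10, 10), (5, 4), (5, 6)):.1f} ms")
print(f"4m by 3m room:   {first_reflection_gap_ms((4, 3), (1, 1.5), (3, 1.5)):.1f} ms")
```

In the 10m room the earliest wall reflection lands at roughly 23ms, inside the fusion window; in the 4m by 3m room it arrives after less than 5ms, in comb-filtering territory.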

The Law Of Averages

As we've seen, room size and acoustics have a massive influence on how an instrument will sound, but what does the room actually sound like? If you've ever measured the frequency response of your control room, you'll probably have noticed that moving the measurement mic leads to radically different responses in the low end — your listening experience is actually not as unpleasant as it looks on the measurement plots! This is because you naturally form an average impression of the sound in the room: your head isn't locked into one place, and your hearing is capable of reducing some of the room's effects on the sound. Unfortunately, as soon as you use one microphone at a fixed position to record sound, this ability is lost; as you only hear one position, there's nothing to average out. Low frequencies in particular tend to sound less balanced in a recording than in real life, due to their susceptibility to boundary interference and room modes.

When measuring your monitor system, then, I recommend taking multiple measurements at and around the listening position, from which you can then calculate or estimate the average... and the same principle can be applied when recording instruments. In other words, when you capture an instrument's sound at multiple places in a room and mix the signals together, the low end will often sound more even than when using just one mic. Sure, you'll probably need some EQ to reduce frequencies that are pronounced in the room, but other than that, you can look at it as a purely statistical method — the more 'samples' you take, the less the outliers will contribute to the average, and the nearer the sum of the signals will be to the average (see Figure 2).
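
The statistical argument can be illustrated with a bin-by-bin power average, one common way of combining multi-position measurements. The three responses below are invented for illustration, not measured data. Note that summing mic signals in a mix is a coherent, phase-sensitive sum rather than a power average, so this only shows the idealised tendency the text describes:

```python
import math


def power_average_db(responses_db):
    """Bin-by-bin power average of several magnitude responses given in dB."""
    averaged = []
    for levels in zip(*responses_db):
        mean_power = sum(10 ** (level / 10) for level in levels) / len(levels)
        averaged.append(10 * math.log10(mean_power))
    return averaged


def spread_db(response_db):
    """Difference between the highest peak and the deepest dip."""
    return max(response_db) - min(response_db)


# Hypothetical low-end responses (dB) at three positions, same five frequency bins.
positions = [
    [6, -12, 3, 0, -4],
    [-3, 4, -10, 5, 2],
    [2, 0, 4, -9, 1],
]
avg = power_average_db(positions)
print("single-position spreads (dB):", [spread_db(p) for p in positions])
print(f"averaged spread: {spread_db(avg):.1f} dB")
```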

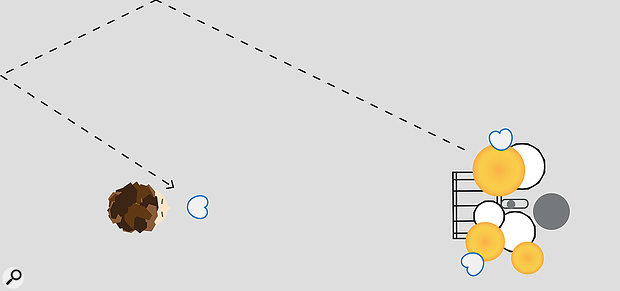

Figure 2: Each location in a room sounds different, especially at low frequencies. Using multiple microphones in the same room can help to even out the response. Here, the double bass has its own mic, but also leaks into the vocal and drum mics, effectively combining the room response at three locations in the mix. Of course the bass's own microphone will dominate the bass sound in the mixture, but at least the bleed can help to 'fill in' any major dips in the response.

Dual-purpose Mics

Generally speaking, though, the fewer mics you need to capture a nicely balanced sound from an instrument, the more powerful the result will be. So if you can make a single microphone serve more than one purpose, that's a pure win; you get to record instruments at multiple positions in the room, while still using few mics in total. An obvious example of such a dual role is to use one judiciously placed mic to capture two instruments, but another less obvious one is to use a single mic to record one instrument's direct sound along with the reverb of another instrument. This saves you from having to use separate room mics to add depth to the mix, and reduces the number of instances where problematic bleed could creep in.

This approach requires a slightly different mindset when picking and positioning your mics. If you want a vocal mic to double as a drum room mic, for example, you'll not only have to pick a mic that sounds great for the voice, but also one suitable for the specific sound you wish to add to the drums. Thus, when working this way, you'll want to give much more consideration to the off-axis sound quality of your mic (see Figure 3).

Figure 3: The cardioid vocal microphone can double as a drum room mic. By aiming its dead spot at the drum kit, the direct drum sound is rejected as much as possible and mainly reflected drum sound is picked up — along with the vocal, of course. It's vital to check the balance carefully when setting up, as the singer's distance from the mic will determine how spacious the drums will sound in the final mix. If you get this to work, you save an extra room mic — a mic which in this case would mean two extra pathways of bleed and a consequent loss of definition.

I'd love to present you with a systematic approach for mic placement when recording multiple instruments in one room like this, but I'm afraid there are just too many variables to take into account. The different compositions, the musicians performing them, their instruments, and both their placement in the room and the unique acoustics of that room make up a unique cocktail, in which everything is interdependent. Any recording decision you make has to be evaluated in this context, as well as that of the musical style and the artistic intentions at play. Because this article is about the upsides of bleed, I'll leave out classical recording using only two microphones, and focus on recording a band in one room.

As a start, I'd recommend that you strive to capture the best possible balance using only one microphone per instrument (or small group of instruments). I guarantee you'll run into many issues at first — but rather than give up and retreat to your comfort-zone of close-miking everything, try to solve any problems by moving instruments and swapping out mics. When you're sure of the instrument positioning, you can check if you need more mics to deliver aspects of the sound you can hear in the recording room but which are so far lacking in the recording. Perhaps the drum mic is picking up plenty of cymbals and snare drum but the kick sounds weak. If the kick isn't heard firmly enough in some of the other mics, there'll be no getting around the need to add a dedicated kick mic, but while this extra mic will give you something useful, it will also detract from the depth and definition you had going before. The disadvantage can be mitigated by decreasing negative bleed (for instance by isolating the kick mic using screens or blankets), and by using a highly directional microphone very close to the kick.

When making decisions about what, if any, extra mics you need, your focus should remain holistic. Don't listen only to what the extra mic is adding to the source for which you intended it: pay close attention to its effect on all the other elements in the mix as well. This way of listening is actually easy when you have the 'base setup' coming over the monitors at all times — just periodically mute the extra mics and listen to what is happening to the greater whole. Coherence between elements and good spatial definition are always more important than the sound of any individual part.

Asking More Of Your Mics

Try asking this question routinely when setting up mics: "What else could this microphone add?" And if something is missing in the sound of a particular instrument then, rather than add another mic, why not try to coax it to the surface by processing the mics that are already set up? Compression can be great for this, as it attenuates dominant sounds and, via make‑up gain, brings up softer sounds. It can also cause some nice interactions: in the example of the vocal mic that doubles as a drum room mic, adding compression means less drum ambience when the vocalist is singing, and more at other times, since the louder vocal triggers the compressor. This can create the helpful impression that the drums sound massive, but without them clashing too much with the vocal. Interactions like these are one of the benefits of bleed: they can add interesting contrast and movement to the sound.
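
The compressor interaction described above can be sketched with a static gain computer. This is a simplification: a hard knee, no attack or release, detection approximated by the power sum of the vocal and the drum ambience (assumed uncorrelated), and hypothetical levels, threshold, ratio and make-up gain:

```python
import math
from typing import Optional


def power_sum_db(*levels_db: float) -> float:
    """Combine uncorrelated signal levels (dB) into a single level."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


def gain_reduction_db(level_db: float, threshold_db: float, ratio: float) -> float:
    """Hard-knee static gain reduction for a detected level above threshold."""
    over = level_db - threshold_db
    return over * (1 - 1 / ratio) if over > 0 else 0.0


def drum_ambience_out_db(ambience_db: float, vocal_db: Optional[float] = None,
                         threshold_db: float = -20.0, ratio: float = 4.0,
                         makeup_db: float = 6.0) -> float:
    """Level of the drum ambience leaving the vocal-mic compressor."""
    if vocal_db is None:
        detected = power_sum_db(ambience_db)
    else:
        detected = power_sum_db(ambience_db, vocal_db)
    return ambience_db + makeup_db - gain_reduction_db(detected, threshold_db, ratio)


print(f"vocalist singing: {drum_ambience_out_db(-24.0, vocal_db=-10.0):.1f} dBFS")
print(f"vocalist silent:  {drum_ambience_out_db(-24.0):.1f} dBFS")
```

With these numbers the drum ambience in the vocal mic sits roughly 7.6dB lower while the singer is singing than during the gaps, which is the ducking effect the text describes.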

EQ & Phase

Once you get to the point of having a blend of microphones that feels good, you'll notice some irregularities in the low end that still need ironing out. Because low frequencies spread omnidirectionally in rooms, they end up in all the mics, and because of their long wavelength they haven't fully 'decoupled' as they arrive at the next microphone. So some audible filtering in the lows is inevitable, even in the best recording rooms, and with only a minimal mic setup. Luckily, this is usually quite easy to correct with EQ — as long as you heed the words of warning that follow! EQ changes not only the frequency balance but also the relative timing of frequency components (phase), and that means it can alter how microphones which pick up bleed add up, and what's more, it can alter things in unpredictable ways. An innocent-looking correction, such as high-pass filtering an instrument below its fundamental, can dramatically alter the sound of other instruments in the low end, even beyond the frequency range of the filter (see Figure 4).

Figure 4: Due to phase shift, EQ can have unpredictable effects when used on microphones containing bleed. Two sound sources (represented by the red and blue sine waves) are recorded using two microphones. The resulting bleed affects the balance between the sources when the microphones are added together, due to phase interaction (A+B). When you decide to get rid of the 50Hz bleed in microphone B by adding a 120Hz high-pass filter (bottom), the filter shifts the frequencies in time by different amounts, causing the 150Hz wave to be out of phase with the bleed in microphone A.
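
The phase shift in Figure 4 can be checked numerically. This sketch assumes the high-pass filter is an analog-prototype, second-order (12dB/octave) Butterworth design at 120Hz; the figure does not state the filter type, so the exact numbers are illustrative. It also assumes, hypothetically, that the 150Hz component arrives in both mics in phase and at equal level:

```python
import cmath
import math


def butterworth_hp2(freq_hz: float, cutoff_hz: float) -> complex:
    """Analog 2nd-order Butterworth high-pass, H(s) = s^2 / (s^2 + sqrt(2)*w0*s + w0^2), at s = j*w."""
    x = freq_hz / cutoff_hz  # frequency normalised to the cutoff
    return -x * x / (1 - x * x + 1j * math.sqrt(2) * x)


def describe(h: complex):
    """Return (gain in dB, phase in degrees) of a complex response value."""
    return 20 * math.log10(abs(h)), math.degrees(cmath.phase(h))


CUTOFF_HZ = 120.0
for f in (50.0, 120.0, 150.0):
    gain_db, phase_deg = describe(butterworth_hp2(f, CUTOFF_HZ))
    print(f"{f:5.0f} Hz: {gain_db:6.1f} dB, phase {phase_deg:+6.1f} deg")

# Hypothetical: the 150 Hz component arrives in mics A and B in phase and at equal level.
h = butterworth_hp2(150.0, CUTOFF_HZ)
print(f"A+B unfiltered: {20 * math.log10(2):.1f} dB, "
      f"with HPF on B: {20 * math.log10(abs(1 + h)):.1f} dB")
```

At 150Hz this filter cuts only about 1.5dB but rotates the phase by roughly 72 degrees, so the A+B sum at that frequency drops from about 6.0dB to roughly 3.5dB, noticeably more than the filter's own attenuation.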

There are a couple of ways to deal with this. The first is not to use EQ on individual mics, but only on the mix as a whole. That way, all the signals are subject to the same phase shift, so their relative phase relationships remain intact. This works like a charm when the instruments already sound balanced and the mix just needs a little polishing. But when an individual instrument doesn't sound how it should (for instance, because its mic is close and over-emphasises the low end due to the proximity effect) you have to EQ that individual channel. It's then a matter of checking carefully how the overall summing of microphones is affected by the EQ.

The first thing to check is the channel's polarity (often labelled 'phase'): in which of the two settings is the sound fuller and better balanced? When it leaves something to be desired in both settings, you could try an all-pass filter to add more phase shift at low frequencies without actually boosting or cutting at any frequency, and see if that improves matters (see Figure 5). Or, if this doesn't work and the blend still sounds worse than it did before the EQ, you could opt for a linear-phase EQ, which boosts or cuts frequencies without shifting them in time relative to one another (linear-phase EQs do have other downsides, but these pale into insignificance next to the benefits of better summing of low frequencies).

Figure 5: An all-pass filter allows you to alter the relative timing of a signal's frequency components, without affecting their amplitude. The two blue curves represent the effect of changing the filter frequency on the phase response. In situations where merely inverting a signal's polarity or moving a microphone won't lead to better summing between signals, such a filter can help to avoid worst-case scenarios. It is impossible (and unnecessary) to perfectly align all frequency components between an instrument and its bleed, but as long as you manage to prevent any major holes or bumps from occurring in the low frequencies, you'll be fine.
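
The defining property of an all-pass filter, unity gain with frequency-dependent phase, is easy to verify. This sketch assumes a first-order analog design, which rotates phase from 0 towards -180 degrees and passes -90 degrees at its corner frequency; the curves in Figure 5 may come from a different filter order, and the two corner frequencies here are hypothetical:

```python
import cmath
import math


def allpass1(freq_hz: float, corner_hz: float) -> complex:
    """Analog first-order all-pass, H(s) = (w0 - s) / (w0 + s), evaluated at s = j*w."""
    x = freq_hz / corner_hz
    return (1 - 1j * x) / (1 + 1j * x)


# Two hypothetical corner frequencies, loosely echoing the two curves in Figure 5.
for corner in (80.0, 200.0):
    row = []
    for f in (40.0, 80.0, 160.0, 320.0):
        h = allpass1(f, corner)
        row.append(f"{f:.0f} Hz: gain {abs(h):.3f}, {math.degrees(cmath.phase(h)):+.0f} deg")
    print(f"corner {corner:.0f} Hz -> " + "; ".join(row))
```

Moving the corner frequency slides the whole phase curve up or down the spectrum, which is the control you use to nudge a problem region of the low end into better summing.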

While getting the phase relations between mics right does involve some conscious thought, the complexity of the situation means there's also a good deal of luck involved. One part of an instrument can sound fine while another part sounds off, and simply moving the mics slightly can make quite a difference, without any rational planning involved. It's just a matter of taking a chance and listening carefully to hear whether things improve at a slightly different position. Should you find out after recording that the mics aren't summing well, you can also try to shift a problematic track slightly in time. A 5ms shift is hardly noticeable as a difference in musical timing, yet it can make a great deal of difference in the summing or cancellation of low/low‑mid frequencies. Your goal is not to perfectly align the signals in time — this is impossible and your attempts will only lead to comb filtering — but rather to 'randomise' their phase interaction by increasing the time difference between them.
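Some quick arithmetic shows why a few milliseconds matter so much in the low end. In a simplified model that assumes two equal-level copies of a signal, one delayed by τ, the combined level is |1 + e^(-j2πfτ)| = 2|cos(πfτ)|, with complete cancellation at f = (2k + 1)/(2τ). Real bleed is quieter and arrives via reflections too, so its notches are shallower, but the pattern is the same. The function names are illustrative.

```python
import math

def summed_level_db(freq_hz, offset_ms):
    """Level of two equal-level copies of a signal offset by offset_ms,
    relative to one copy alone: 2*|cos(pi * f * tau)|, expressed in dB."""
    tau = offset_ms / 1000.0
    magnitude = abs(2.0 * math.cos(math.pi * freq_hz * tau))
    return 20.0 * math.log10(magnitude) if magnitude > 1e-12 else float("-inf")

def notch_frequencies(offset_ms, max_hz):
    """Frequencies (Hz) of complete cancellation: f = (2k + 1) / (2 * tau)."""
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) * 1000.0 / (2.0 * offset_ms)
        if f > max_hz:
            return notches
        notches.append(f)
        k += 1

print(notch_frequencies(5, 600))          # [100.0, 300.0, 500.0]
print(round(summed_level_db(100, 2), 1))  # 4.2
```

So if the bleed trails the close mic by 5ms, 100Hz cancels outright; sliding one track by 3ms so the offset becomes 2ms turns that hole into a boost of around 4dB, a change no listener would hear as a timing difference.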

Decorrelation: The Reverb Trick

When seeking to randomise the relationship between tracks, though, you can go beyond simply shifting them in time. Reverb, in particular, is a fantastic tool for 'shuffling' a sound in time while preserving its frequency content. This time-randomising quality is what makes it so useful: when you send one of two similar signals through a reverberator, you break the phase connection between them, so the comb filtering you would otherwise get from summing them largely disappears. An obvious downside is that reverb also tends to make the sound appear more distant, but when you're trying to create the illusion that an instrument was just a bit more present in the recording room, it can work wonders. Although the reverb helps you to recognise the source a bit better in the mix, you won't actually hear it as a source on its own. This works especially well when sound sources are positioned close together, such as with a vocalist who also plays guitar, or when an instrument consists of multiple sources, as with a drum kit.
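One way to see the decorrelation numerically is to compare a plain delayed copy of a signal with a reverberated copy, using normalised cross-correlation (1.0 means the copy is effectively the same signal, just shifted, so summing it will comb-filter). This is a sketch under loudly stated assumptions: noise stands in for an instrument, the 'reverb' is a hypothetical exponentially decaying noise impulse response rather than any real reverb algorithm, and the 8kHz sample rate is chosen only to keep the pure-Python convolution quick.

```python
import math
import random

def toy_room_ir(length, decay, rng):
    """Exponentially decaying noise: a crude stand-in for a short ambience reverb (an assumption)."""
    return [rng.gauss(0.0, 1.0) * math.exp(-decay * n) for n in range(length)]

def convolve(x, h):
    """Direct-form convolution (slow but dependency-free)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def peak_correlation(a, b, max_lag):
    """Largest |normalised cross-correlation| between a and b for lags 0..max_lag (b lagging a)."""
    n = min(len(a), len(b)) - max_lag
    seg_a = a[:n]
    energy_a = sum(p * p for p in seg_a)
    best = 0.0
    for lag in range(max_lag + 1):
        seg_b = b[lag:lag + n]
        num = sum(p * q for p, q in zip(seg_a, seg_b))
        den = math.sqrt(energy_a * sum(q * q for q in seg_b))
        best = max(best, abs(num) / den)
    return best

SAMPLE_RATE = 8000
rng = random.Random(1)
source = [rng.gauss(0.0, 1.0) for _ in range(3000)]                  # noise stands in for an instrument
delayed = [0.0] * round(0.005 * SAMPLE_RATE) + source                # same signal, 5ms later
reverbed = convolve(source, toy_room_ir(round(0.1 * SAMPLE_RATE), 0.005, rng))

print(round(peak_correlation(source, delayed, 60), 3))   # 1.0: a pure delay stays fully correlated
print(round(peak_correlation(source, reverbed, 60), 3))  # far lower: the 'room' has shuffled it in time
```

The delayed copy still lines up perfectly at one lag, so summing it with the original produces the notches described earlier; the reverberated copy has no single lag at which it lines up, which is why it adds to the sound instead of filtering it.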

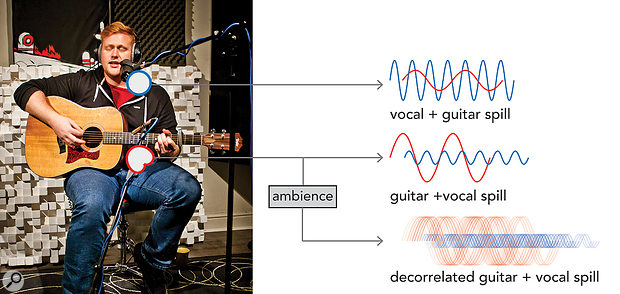

Figure 6: By not using the guitar microphone directly in the mix, but only via a reverb, you can prevent its spill from colouring the vocal sound. The reverb makes it seem as if the guitar was a bit fuller-sounding and louder in the room, but it's the vocal mic that is responsible for the main guitar sound and placement. Should you still need a bit of the direct guitar sound in the mix, try to EQ it in such a way that any negative side effects it has on the vocal are kept to a minimum. The guitar mic doesn't need to sound like a nice guitar on its own — I sometimes take out a lot of high frequencies in the guitar mic to make it add to the vocal, but then I send the pre-EQ signal to the reverb, giving the impression of a brighter guitar.

The best way to do this is to record the most important source without compromise using a dedicated microphone, and make sure that this mic picks up as much of the other nearby sources as possible without degrading the main source. Also record the other sources with their own close mics, but don't use these directly in the mix. Instead, send these close mics to a short ambience reverb patch that mimics the sound of the recording room. This decorrelates the signals from the multiple mics, so you can really add to the sound of the main source with minimal audible filtering and few conflicts in placement. A precondition for this approach is that the musicians maintain a good acoustic balance in the room, as you won't be able to make drastic balance changes at the mix (Figure 6 illustrates one possible application of this idea).
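The routing described here, including the pre-EQ reverb send mentioned in the Figure 6 caption, can be written as a simple signal flow. Treat this as a hypothetical sketch, not any DAW's API: `build_mix` and `toy_ambience` are made-up names, the 'ambience' is just a handful of decaying echoes standing in for a real room patch, and single-sample impulses stand in for the vocal and guitar tracks so the results are easy to read.

```python
def toy_ambience(x, sample_rate=48000):
    """A few irregularly spaced, decaying echoes: a crude stand-in for a short room-ambience patch (assumption)."""
    taps_ms = (7.3, 11.9, 17.1, 23.7, 31.3, 41.9)
    y = [0.0] * len(x)
    for k, ms in enumerate(taps_ms):
        d = int(sample_rate * ms / 1000)
        g = 0.6 * 0.7 ** k
        for i in range(d, len(x)):
            y[i] += g * x[i - d]
    return y

def build_mix(main_mic, close_mics, reverb, send_gain=0.5, direct_gain=0.0, direct_eq=None):
    """Hypothetical routing: the main mic goes straight to the mix; the other close mics
    feed the reverb send pre-EQ, and reach the mix directly only if direct_gain > 0."""
    n = len(main_mic)
    mix = list(main_mic)
    send_bus = [0.0] * n
    for mic in close_mics:
        for i in range(n):
            send_bus[i] += send_gain * mic[i]          # pre-EQ reverb send
        if direct_gain > 0.0:
            direct = direct_eq(mic) if direct_eq else mic
            for i in range(n):
                mix[i] += direct_gain * direct[i]      # optional, EQ'd direct path
    wet = reverb(send_bus)
    for i in range(n):
        mix[i] += wet[i]
    return mix

n = 4800
vocal_mic = [0.0] * n
vocal_mic[0] = 1.0        # stands in for the vocal mic (which also carries the guitar spill)
guitar_mic = [0.0] * n
guitar_mic[100] = 1.0     # stands in for the guitar close mic

mix = build_mix(vocal_mic, [guitar_mic], toy_ambience)
print(mix[0], mix[100], mix[450])     # 1.0 0.0 0.3

# A broadband cut stands in for 'taking out the highs' on the direct guitar path.
mix_eq = build_mix(vocal_mic, [guitar_mic], toy_ambience, direct_gain=0.5,
                   direct_eq=lambda x: [0.5 * s for s in x])
print(mix_eq[100], mix_eq[450])       # 0.25 0.3
```

In the first mix the guitar close mic never reaches the output directly (sample 100 is silent) and is heard only through the ambience. In the second, the direct path is EQ'd while the reverb still receives the untouched signal, mirroring the pre-EQ send described in the Figure 6 caption.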

This method of using extra microphones purely to drive reverbs is also useful to build a more complete, three-dimensional image of an instrument in a small recording room. In that situation, placing the mic at a distance will immediately bring out the negative qualities of the room. An alternative is to record the instrument up close at different positions and use some of these mics as reverb sends, so you can improve the instrument's overall balance. For instance, when recording a double bass, you could place mics near the bridge, the neck and the back of the instrument. If you then use the direct sound from the microphone near the bridge, but use the others to drive an ambience reverb, you can bypass some of the negative effects of the small room, while still capturing a full-sounding image of the bass.

This way of working somewhat mimics the use of screens to increase source separation when recording in a big studio. The screens mainly help to break up direct paths between microphones rather than providing true isolation. Yes, the sound gets around them thanks to reflections, but it takes the sound a bit longer to reach the mic than if the screens were removed. This delays and shuffles the bleed in time, much as the reverb does in the previous example. To me, this way of recording represents the ultimate compromise when crafting pop music together in a room, as you can still sense that a real event is taking place. You feel the tension and concentration in the players. But, at the same time, it gives you plenty of options to get creative behind the mixing board.
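To put rough numbers on the screen effect, the sketch below converts path length into arrival time. The distances are hypothetical examples, and 343m/s is the usual approximate speed of sound in air at room temperature; real bleed around a screen arrives via many reflections, not one tidy detour.

```python
SPEED_OF_SOUND_M_S = 343.0   # approximate, in air at around 20 degrees C

def arrival_ms(path_m):
    """Time in milliseconds for sound to travel path_m metres."""
    return 1000.0 * path_m / SPEED_OF_SOUND_M_S

def screen_extra_delay_ms(direct_path_m, detour_path_m):
    """Extra delay when a screen forces bleed to take a longer, reflected route."""
    return arrival_ms(detour_path_m) - arrival_ms(direct_path_m)

# Hypothetical distances: 2m straight across the room, 3.5m via a reflection around the screen.
print(round(arrival_ms(2.0), 1))                  # 5.8
print(round(screen_extra_delay_ms(2.0, 3.5), 1))  # 4.4
```

An extra four or five milliseconds is in the same range as the small track shifts that can transform low-frequency summing, which helps explain why screens change the character of the bleed even though they don't block it.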

About The Author

Wessel Oltheten works as a mix and mastering engineer in his own Spoor 14 studio.

He also records on location, teaches audio engineering at the Utrecht University of the Arts, and is the author of Mixing With Impact, a book on mixing that's available worldwide through Focal Press.